Summary: Dive into the dynamic world of split testing, an indispensable strategy for digital marketers aiming to elevate their website conversion rates and optimize user engagement. This comprehensive guide demystifies A/B testing, from debunking common misconceptions to revealing how accessible tools like Optimizely or Omniconvert can revolutionize your marketing efforts. Whether you’re a novice eager to understand the basics of split testing or a seasoned marketer seeking advanced insights into multivariate tests (MVT) and landing page optimization, this article is your all-in-one resource. Learn how to calculate sample sizes accurately, select the optimal pages for testing, and navigate the challenges of split testing to achieve measurable improvements. Embark on this journey to unlock the full potential of your online presence, guided by data-driven decisions and industry best practices.

Introduction to Split Testing

Before we dive into the basics of what is split testing and explore landing page optimization tools, let’s address some common misconceptions:

- Split tests, also known as A/B testing, are expensive.

- They require a ton of technical knowledge.

- They require a lot of setup time.

However, with tools like Omniconvert, Optimizely, or VWO, split testing has become more accessible than ever. If these misconceptions keep your team from running A/B tests, it’s time to reconsider. Split testing is crucial to website optimization, enabling marketers to make data-driven decisions. Landing page optimization and optimizing mobile conversion rates are fundamental aspects of digital marketing strategies to improve website conversion rates.

Technical expertise for split testing is a low bar to clear. If you can add a Google Analytics script to your site, you’re well-equipped to handle the setup for split testing.

Defining Goals and Objectives

Firstly, clearly define your goals and objectives for the test. Understand the specific metrics you aim to improve: click-through rates, conversion rates, or user engagement.

Secondly, gain a solid understanding of your target audience. Tailoring your test variations based on audience segments can yield more insightful results and informed decisions.

Ensure a systematic and consistent testing process. Focus on changing one variable at a time so you can measure each change’s impact accurately and avoid confounded results.

Lastly, prepare to analyze the data gathered from the split test objectively. Consider not only the outcome but also the insights gained, which can inform future optimization strategies.

Split testing is more than a technical endeavor; it’s an integral part of a layered conversion optimization plan. Understanding its proper use is crucial for effective implementation.

The Value of Split Tests in Digital Marketing

Split testing, often referred to as A/B testing or A/B split testing, offers invaluable insights into user preferences and behavior. It directly impacts landing page optimization and overall website conversion rates. But when should companies opt for split tests?

Launching a new page, improving the checkout flow, or diagnosing user interaction failures does not always call for a split test. A usability test or a user acceptance test might be more appropriate in such scenarios.

However, split testing becomes invaluable when faced with two competing visions for a vital page. What is split testing but a method to empirically determine which version of a page performs better in real-world conditions?

What should I check to ensure everything works before a split test goes live?

A thorough examination of all campaign components is crucial. Ensure uniformity in appearance across different browsers, verify the functionality of your call-to-action (CTA) button, and check that all advertisement links direct users correctly. This level of quality assurance helps maintain the integrity and accuracy of your results, laying the groundwork for successful split testing.

The Anatomy of a Split Test

At the core of split testing are two main components: the Champion and the Challenger:

- Champion: This page is your current best performer, often a high-traffic page that, despite its success, may still suffer from high bounce rates or low conversion rates.

- Challenger: Crafted based on hypotheses about potential improvements, the challenger page is designed to test whether these changes will outperform the champion.

This process is not just about confirming which page performs better but also about gathering actionable data. Split testing tools and A/B testing marketing strategies leverage this data to refine the user experience and optimize conversion paths.

How do I calculate the sample size needed for a split test?

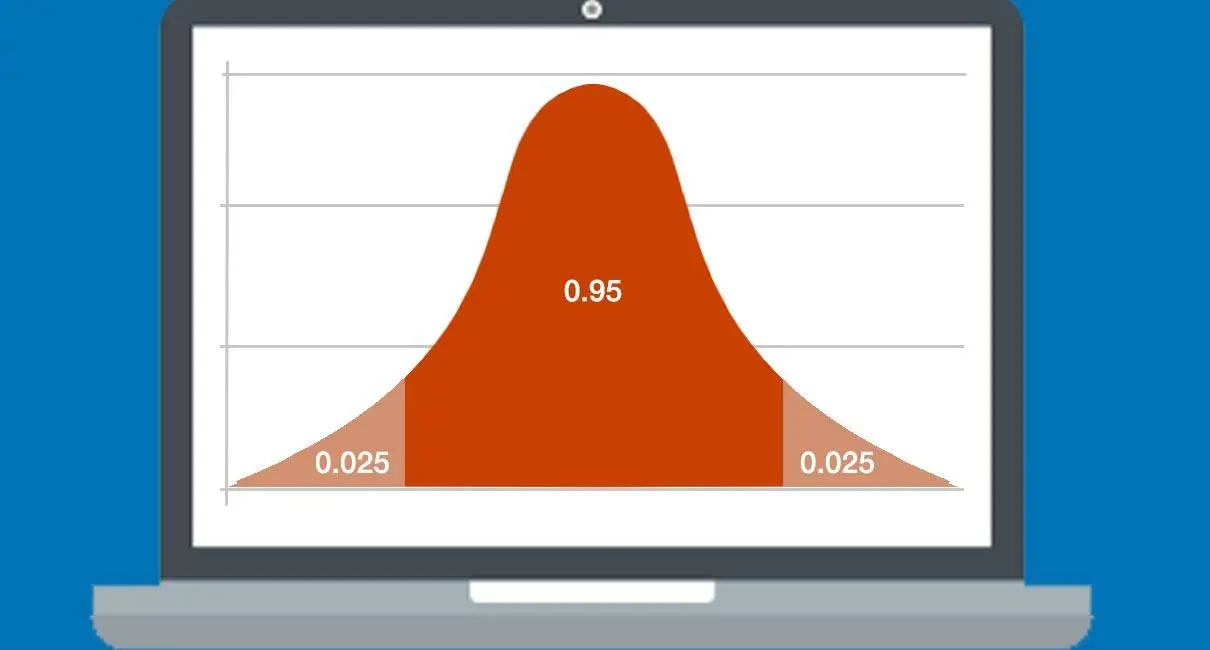

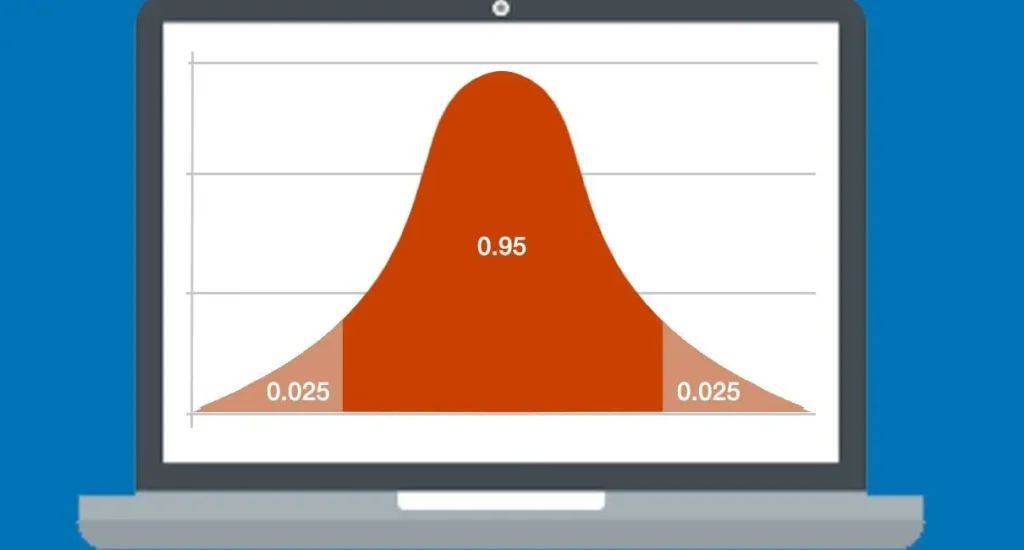

The foundation of any reliable split test is statistical significance. Tools like Optimizely’s calculator help determine the required sample size by considering the baseline conversion rate, the minimum detectable effect, and the desired significance level. This ensures that your test has enough data to draw accurate conclusions.
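To make the three inputs concrete, here is a minimal sketch of a textbook sample size calculation for comparing two conversion rates. It is not Optimizely’s method (their calculator may use different statistics and return different numbers), and the function name, the `power` parameter, and the example figures are assumptions added for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation (two-sided, two-proportion test).

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    relative_mde:  minimum detectable effect as a relative lift, e.g. 0.20 for +20%
    alpha:         significance level (0.05 corresponds to 95% confidence)
    power:         chance of detecting the lift if it is real (assumed, not from the article)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical example: 5% baseline, looking for a 20% relative lift (5% -> 6%)
print(sample_size_per_variation(0.05, 0.20))
```

With these example inputs the result is on the order of 8,000 visitors per variation. Halving the minimum detectable effect roughly quadruples the requirement, which is why small expected lifts need high-traffic pages.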

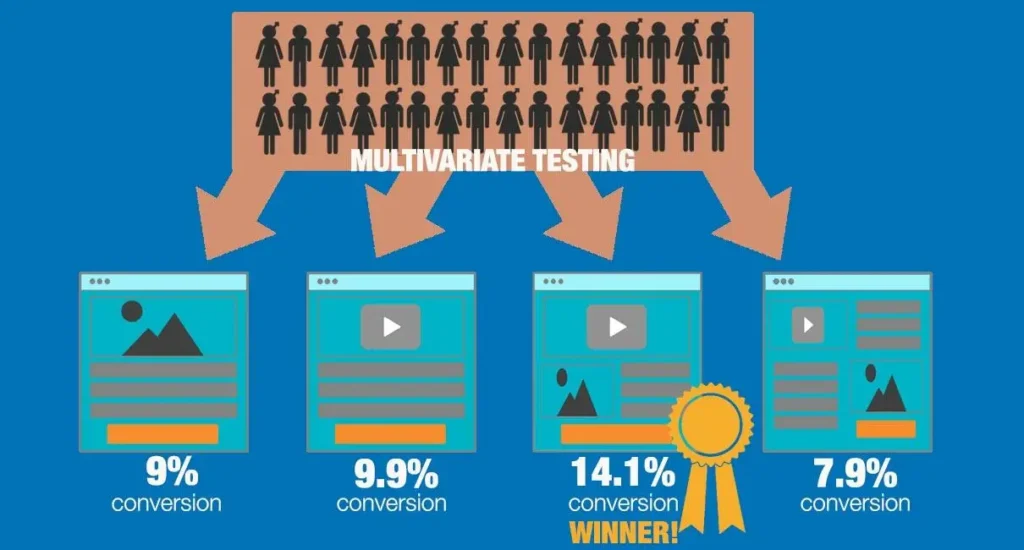

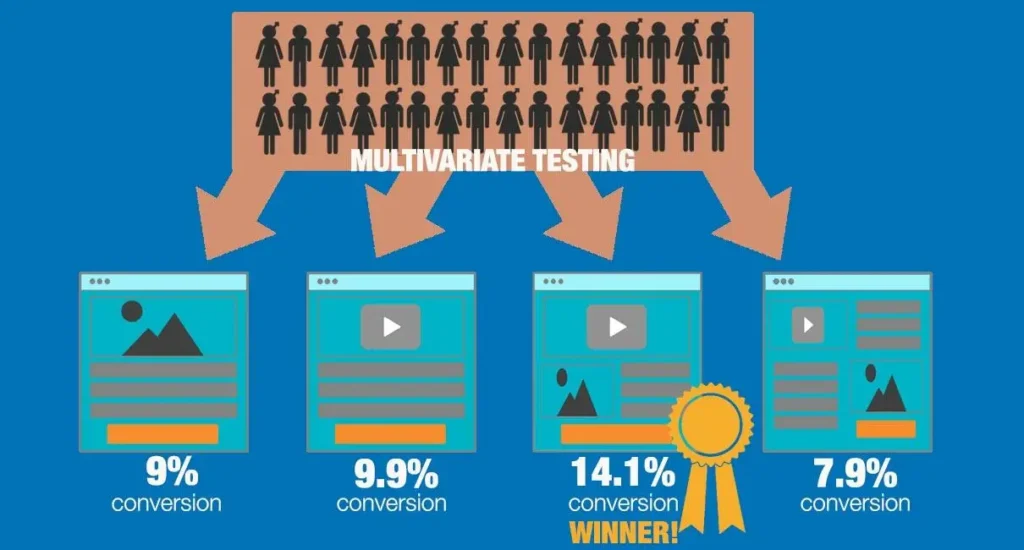

Multivariate Tests (MVT)

Managing variables effectively

Multivariate testing (MVT), a complex form of A/B testing, examines multiple variables simultaneously to see how combinations affect user behavior. This method is particularly suited for high-traffic sites and is an advanced tool for optimizing landing page and conversion rate optimization services.

How can I eliminate confounding variables during a split test?

Reliable split test results depend on careful control over external factors. Keeping variables such as traffic sources and testing periods consistent minimizes their impact and keeps your results accurate.

Eliminating confounding variables

Confounding variables are factors other than the one being tested that could influence the outcome of your experiment. Eliminating them is a critical step in ensuring the validity of your split testing results. Identifying and controlling for confounding variables is essential to isolating the effects of the changes you’re testing, whether for landing page optimization, improving website conversion rates, or optimizing mobile conversion rates.

- Consistency Across Variables: To mitigate the influence of external factors, it’s crucial to keep conditions as consistent as possible across both the champion and challenger pages. This includes traffic sources, time of day, and external marketing activities.

- Segmentation: Proper segmentation of your audience can also help control for these variables. Tailoring your tests based on audience behavior or characteristics ensures that the insights you gain are relevant and actionable.

- Use of Analytics: Leveraging split testing and web analytics tools can provide additional insights into potential confounding factors. Google Analytics split testing, for example, allows you to monitor and adjust for unexpected shifts in traffic or behavior that could skew your results.

By systematically addressing confounding variables, you can enhance the reliability of your split testing efforts. This step is crucial for businesses focused on conversion rate optimization and those seeking to leverage split testing to improve conversions effectively.

Looking to get up to speed quickly with A/B split testing? Check out SiteTuners’ A/B Split Testing Crash Course for essential insights and best practices.

Choosing the Right Tools for Split Testing

Choosing the right split testing tools is crucial for any digital marketer aiming to improve their site’s performance. Platforms like Omniconvert, Optimizely, or VWO provide comprehensive features for A/B split testing, multivariate and split URL testing, and more. These tools are essential for effective landing page optimization and conversion rate optimization services.

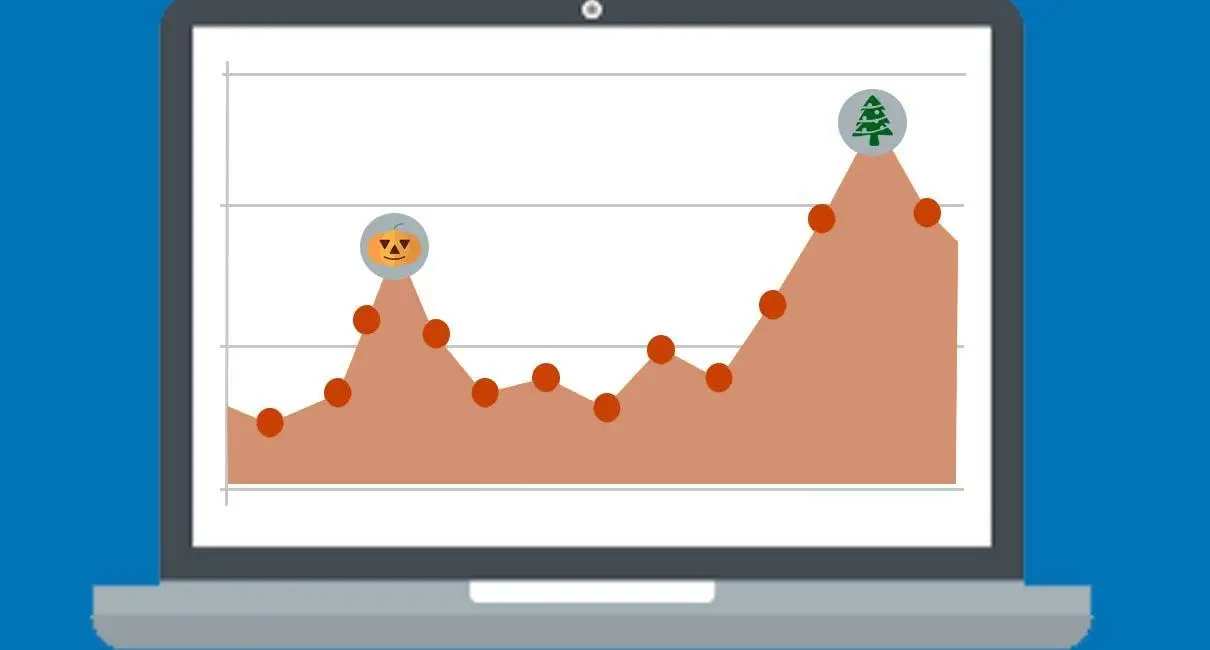

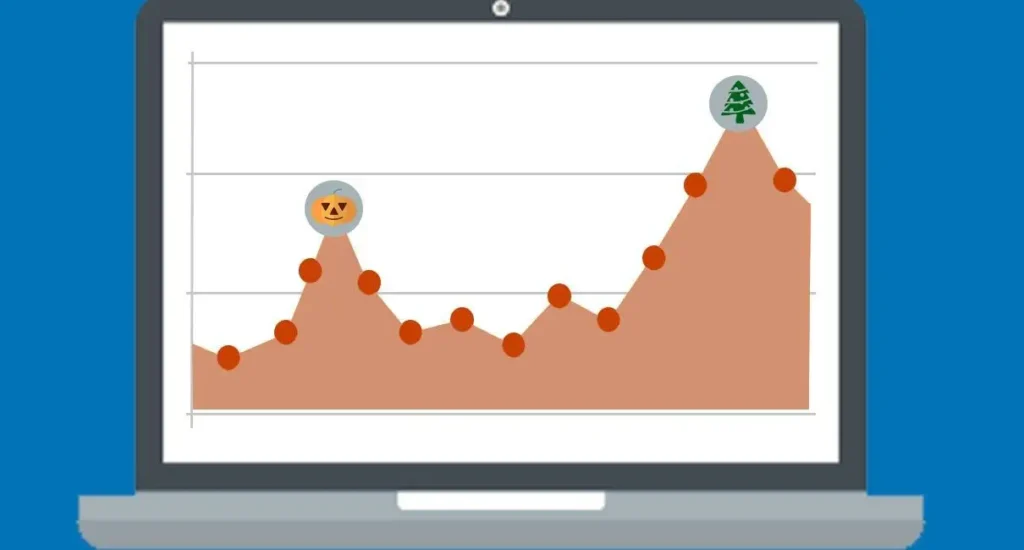

Traffic/seasonality considerations are also vital when planning your tests. Ensuring consistent traffic to your test pages helps obtain reliable data, a cornerstone of successful split testing to improve conversions.

Identifying Optimal Pages for Testing

The key to split testing success is selecting the correct pages. High-traffic pages with suboptimal performance metrics are prime candidates for landing page optimization.

Web analytics tools can illuminate which pages benefit most from A/B split testing. Furthermore, incorporating user feedback can provide qualitative insights that guide your split testing efforts.

For instance, if your analytics tool is Google Analytics, you can utilize a weighted sort feature to discern pages that fall within the sweet spot of high traffic but low engagement. This makes the process of hunting for optimal testing pages more efficient.

How do you run a split test on a landing page?

Begin with this strategic selection process. Remember, split testing isn’t just about changing elements at random; it’s about making informed decisions based on data.

From your survey tool, you need to know the tasks people are trying to perform and how successful they are at those tasks. There are a few questions you need to include in your website survey, but the most critical ones are these:

- What are you looking for on the website?

- Did you find the information you were looking for?

Just as you look for high-traffic, low-interaction pages in a web analytics tool, in the surveys you need to find the intersection of two things:

- Tasks that people care about most

- Tasks where they fail a lot

Once you find that intersection, you can find a critical page in that section to test.

Elements Worth Testing

Regarding split testing, nearly every element on your page can be tested. However, some areas are more likely to influence user behavior and conversion rates:

- Headlines: The power of a compelling headline can’t be overstated in landing page optimization.

- Call-to-action (CTA) Buttons: Variations in text, color, and placement can significantly impact click-through rates.

- Images and Videos: Visual elements are crucial in engaging users and can be pivotal in optimizing mobile conversion rates.

- Form Fields: Simplifying forms can lead to an increase in user submissions and improve website conversions overall.

Each element tested brings you closer to understanding the optimal configuration that resonates with your audience.

Expected Outcomes of Split Testing

The ultimate goal of split testing, from A/B tests to more complex multivariate & split URL testing, is to drive actionable insights that lead to measurable improvements. Expected outcomes include:

- Increased Conversion Rates: Optimizing elements based on test results can significantly enhance your site’s conversion rates, whether through improved landing page optimization or more effective CTAs.

- Reduced Bounce Rates: Effective split testing can lead to more engaging content, keeping visitors on your site longer.

- Enhanced User Engagement: Tests focusing on user experience can increase engagement metrics such as time on site and page views per session.

- Better ROI on Marketing Spend: Optimizing conversion rates improves the utilization of your marketing budget, increasing the overall return on investment.

Duration and Timing of Tests

How long should the tests run? Achieving statistical significance is crucial in determining the duration of your split tests. This depends on your site’s traffic volume and the variability of your metrics.

- Aim for Statistical Significance: Use split testing tools to calculate when your test results have reached a confidence level indicating a real difference between variations, rather than one produced by chance.

- Traffic Volume Considerations: Sites with higher traffic can reach significant results faster than those with less traffic.

- Monitor and Adjust: Based on the data, be prepared to extend or shorten your testing period. Consistent monitoring ensures that you’re not testing longer than necessary.
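As a rough illustration of what a testing tool checks when it reports a confidence level, the sketch below runs a standard two-proportion z-test on conversion counts. This is a generic textbook test, not the method of any particular tool, and the function name and the visitor numbers are hypothetical. It also assumes the sample size was planned in advance; checking repeatedly and stopping at the first significant reading makes false positives more likely.

```python
import math
from statistics import NormalDist


def two_proportion_test(conv_a, visitors_a, conv_b, visitors_b):
    """Return (z, p_value) for the difference in conversion rate between A and B."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate under the assumption that both pages convert equally well
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("Not enough variation in the data to run the test")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    return z, p_value


# Hypothetical counts: champion converts 500 of 10,000, challenger 600 of 10,000
z, p_value = two_proportion_test(500, 10_000, 600, 10_000)
confidence = 1 - p_value
print(f"z = {z:.2f}, p = {p_value:.4f}, confidence = {confidence:.1%}")
```

A p-value below 0.05 corresponds to the 95% confidence level commonly used as the bar for calling a winner.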

SEO Considerations in Split Testing

Split testing can coexist harmoniously with SEO strategies when correctly managed:

- Maintain Consistency for Search Engines: Ensure your test variations don’t stray significantly from your original content to avoid SEO penalties.

- Avoid Cloaking and Ensure Proper Use of Canonical Tags: Make sure search engines know which version is preferred for indexing.

- Monitor SEO Metrics: Monitor your search rankings and organic traffic during and after tests to ensure no adverse effects.

Effective split testing management with Google Analytics and adherence to SEO best practices can enhance your site’s performance without sacrificing search visibility.

Limitations and Pitfalls of Split Testing

Split testing transcends mere optimization; it embodies a philosophy of relentless enhancement and empirical decision-making. Seamlessly incorporating split testing into your comprehensive digital marketing strategy empowers you to ensure every adjustment is data-backed, significantly amplifying your online presence’s efficacy.

However, it’s crucial to recognize that split testing, like any powerful tool, comes with challenges and constraints. The journey of split testing—from deciphering what split testing is to navigating through A/B testing best practices and employing conversion rate optimization tools—is marked by a spectrum of potential pitfalls:

Understanding Limitations: While split testing unveils numerous opportunities for improving website conversions and user experience, its effectiveness is contingent on various factors, including the volume of traffic to your site and the clarity of the testing goals. Acknowledging these limitations is essential for setting realistic expectations and strategies.

Common Mistakes: Pitfalls such as testing too many variables simultaneously or misinterpreting results can derail your optimization efforts. Embracing a structured approach to split testing, focusing on one change at a time, and employing robust analytics for interpretation can mitigate these risks.

Strategic Pivot Points: Recognizing when split testing yields diminishing returns or when a test is steering you away from your core objectives is crucial. The ability to pivot based on data rather than persist with ineffective strategies underscores the adaptive nature of successful digital marketing campaigns.

Beyond the confines of metrics, split testing’s true essence lies in its capacity to deepen one’s understanding of one’s audience. This insight is instrumental in crafting more targeted, effective marketing strategies and enhancing engagement across all digital touchpoints.

As we tackle the intricacies of split testing, embracing both its potential and its challenges, we unlock the capability to refine our online experiences and forge stronger connections with our audience. Whether our goals encompass enhancing user experience, boosting website conversions, or making more informed marketing decisions, split testing is a pivotal tool in our digital optimization arsenal, offering a pathway to achieving those goals.

Conclusion: The Integral Role of Split Testing

Split testing is more than just an optimization tool; it’s a mindset that encourages continuous improvement and data-driven decision-making. By integrating split testing into your broader digital marketing strategy, you can ensure that every change you make is informed by actual user data, maximizing the effectiveness of your online presence.

Remember, the aim is not just to improve metrics in isolation but also to better understand your audience and how they interact with your site. This deeper insight allows for more targeted and effective marketing efforts, driving better results across all your digital channels.

As we’ve explored the essentials of split testing, from its definition to advanced A/B testing best practices and conversion rate optimization tools, the potential benefits are vast. Whether you’re looking to improve website conversions, enhance user experience, or make more informed marketing decisions, split testing offers a pathway to achieving those goals.

Before we dive into the basics of split testing, let’s get a few misconceptions out of the way:

- Split tests are expensive.

- They require a ton of technical knowledge.

- They require a lot of setup time.

If that’s what is keeping your team from running A/B tests, then you should absolutely get started.

It’ll take you a few minutes to set up a test with a basic free tool like Google Content Experiments (although Google now recommends Optimize as their testing platform).

Technical expertise for split testing is also a low bar to clear. If you have someone who can plug in the Google Analytics script for a site, then you have someone who can plug in similar scripts for the testing code.

It’s not the technical aspects that you should worry about – it’s everything else. Split tests should be one part of a multi-layered conversion optimization plan. And to use it that way, you need to understand how to use split tests properly.

Why should companies use split tests?

- If you’re looking to launch a new page or section of the website, or improve the flow of the cart, or find out why people are failing to do what they need to do, you DON’T need a split test. You need a usability test.

- If you’re looking to ensure that features don’t error out when launched, causing conversion dips, you DON’T need a split test. You need to rely on a user acceptance test.

At the most basic level, you’ll need a split test when you have two competing ideas about how to show an important page.

The underlying assumption there is that you should have a process for coming up with those types of ideas regularly. You should not run your split testing efforts as a fully separate thing from your other online marketing efforts.

What’s in a split test?

The basic components of a split test are a champion page and a challenger page:

Champion

This is traditionally a high-traffic page (so it’s worth fixing) that either has a high bounce rate or a low conversion rate (so a challenger page is needed).

Challenger

This is generally a page similar to the champion, but with a few tweaks made based on theories about how the page can be improved.

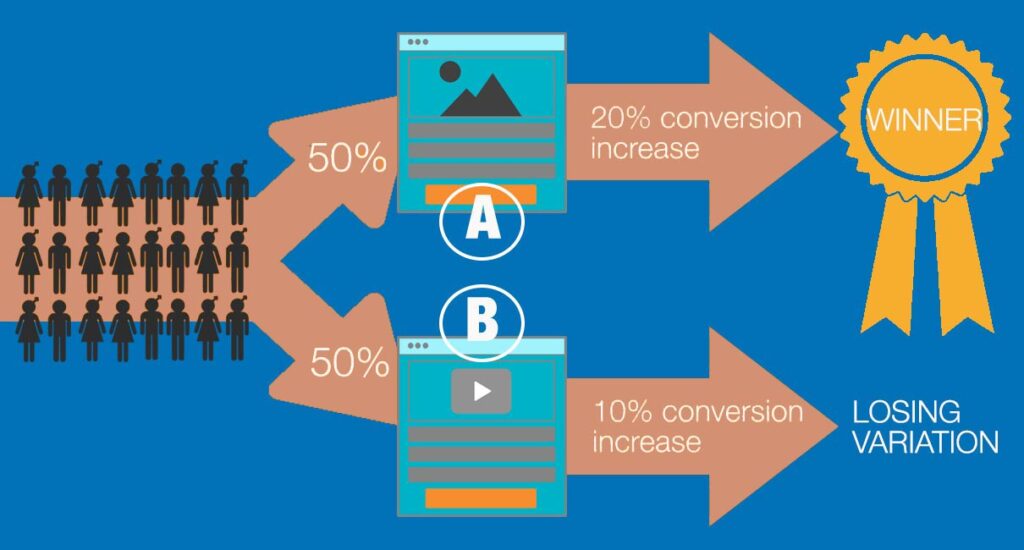

What typically happens is that half the traffic gets sent to the champion page, and the other half goes to the challenger page. Some metric like bounce rate or clickthroughs gets measured, and over time, one of the pages wins.
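Testing tools handle the traffic split for you, but the idea can be sketched in a few lines. The example below is one common approach (hashing a visitor ID into a bucket), not how any specific tool works; the function name, the experiment label, and the visitor ID format are made up for illustration.

```python
import hashlib


def assign_variation(visitor_id, experiment="landing-page-test", challenger_share=0.5):
    """Deterministically send a visitor to the champion or the challenger.

    Hashing the visitor ID means a returning visitor always sees the same page,
    while the population as a whole is split close to the requested share.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    return "challenger" if bucket < challenger_share else "champion"


# Hypothetical visitor IDs, e.g. taken from a first-party cookie
counts = {"champion": 0, "challenger": 0}
for n in range(10_000):
    counts[assign_variation(f"visitor-{n}")] += 1
print(counts)
```

Because the assignment depends only on the visitor ID and the experiment label, no per-visitor state has to be stored to keep the experience consistent across visits.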

What about MVT?

Multivariate tests (MVTs) are a close cousin of split tests.

Instead of testing one variable between two pages – like the placement of the call-to-action or the presence of trust symbols above the fold – multivariate tests split the traffic among multiple pages, testing different combinations of elements at the same time.

There’s a higher traffic bar to clear to hold effective multivariate tests, but if you have a high-traffic site, it can be a more efficient way to test multiple variables compared to running several split tests for the same page.
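To see why the traffic bar is higher, it helps to count the pages involved. The sketch below enumerates every combination for a hypothetical multivariate test; the element names, their options, and the monthly traffic figure are illustrative assumptions.

```python
from itertools import product


def mvt_combinations(elements):
    """List every combination of element options as a dict per page variant."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*(elements[n] for n in names))]


# Hypothetical test: three elements with two options each
elements = {
    "cta_placement": ["above the fold", "below the fold"],
    "trust_symbols": ["shown", "hidden"],
    "headline": ["current", "clearer"],
}
combos = mvt_combinations(elements)
monthly_visits = 20_000  # assumed traffic to the tested page

print(f"{len(combos)} page variants, about {monthly_visits // len(combos)} visits each per month")
for combo in combos:
    print(combo)
```

Three elements with two options each already produce eight variants, so each variant receives only an eighth of the traffic, compared with half in a simple split test.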

If you’re just starting out, don’t worry about multivariate tests too much, for now. You’ll get plenty of bang for your buck doing split tests on the most critical pages.

What do you need for split tests?

It doesn’t take a lot to get started with split tests, but you do need to think about two things:

Split testing tool

The free version of Google Optimize might be a good place to start if you have no experience with split tests, so you can see if it fits your needs.

When you hit the ceiling of what you can do with the tool, you can consider using paid tools. You can get demos from tools like Optimizely or VWO.

Traffic/seasonality

As much as possible, you want to ensure that the traffic to the site doesn’t change drastically during the test. That means if your traffic shifts drastically during the holidays, or if you’re expecting a change in the makeup of your traffic, split tests are going to be less reliable.

Are there any companies that shouldn’t run split tests?

Before you actually run your tests, make sure you’re testing areas that can actually be improved by split tests, and you have either the internal resources to run tests or a viable third party to help you conduct the AB tests.

- If you don’t have Google Analytics or a similar tool installed to look for viable pages to test, you may want to delay running AB tests.

- Don’t test areas that have existing crippling errors. Fix the big issues first before running the tests.

- If you have fewer than 10,000 to 20,000 visits per month for traffic-based split tests, or fewer than 10 conversions per day, AB testing might not be right for your organization yet.

- If you don’t have someone to plug in the scripts, and make the winner of the test the official version that lives on the site, you might want to delay holding tests.
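The checklist above can be expressed as a simple readiness check. This is only a sketch: the function and parameter names are invented for illustration, and the default thresholds use the lower end of the 10,000 to 20,000 visits range mentioned above.

```python
def split_test_blockers(monthly_visits, daily_conversions, has_analytics,
                        can_deploy_scripts, has_crippling_errors,
                        min_monthly_visits=10_000, min_daily_conversions=10):
    """Return a list of reasons to delay split testing (empty list means ready)."""
    blockers = []
    if not has_analytics:
        blockers.append("Install a web analytics tool to find viable pages first")
    if has_crippling_errors:
        blockers.append("Fix the big site issues before running tests")
    if monthly_visits < min_monthly_visits or daily_conversions < min_daily_conversions:
        blockers.append("Traffic or conversions are too low for reliable results")
    if not can_deploy_scripts:
        blockers.append("Find someone to add test scripts and publish the winner")
    return blockers


# Hypothetical site: enough traffic, but nobody available to deploy scripts
for reason in split_test_blockers(25_000, 15, True, False, False):
    print("-", reason)
```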

How do you find pages to test?

There are a few things that can help you find the main pages to test: a web analytics tool, a survey tool, and some basic smarts about how to use both.

Web Analytics

From your web analytics tool, you need to filter down to high traffic pages, so the page is worth improving. But it also helps if you find the high traffic pages where people don’t act, so you know you have a lot of room for improvement. Those two things tend to not be all that well correlated, so you have to dig for a bit to find that intersection.

If your web analytics tool is Google Analytics, you can use a handy feature called weighted sort to find pages that are inside that intersection, making hunting for pages a little easier.
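If your tool has no such feature, you can approximate the idea on exported data. The sketch below ranks pages by a simple score, share of traffic multiplied by bounce rate. This is an illustrative stand-in, not Google Analytics’ actual weighted sort formula, and the page paths and numbers are hypothetical.

```python
def rank_pages_for_testing(pages):
    """Sort pages so that high-traffic, high-bounce pages come first."""
    total_views = sum(page["pageviews"] for page in pages)

    def score(page):
        traffic_share = page["pageviews"] / total_views
        return traffic_share * page["bounce_rate"]

    return sorted(pages, key=score, reverse=True)


# Hypothetical export from a web analytics tool
pages = [
    {"path": "/about", "pageviews": 800, "bounce_rate": 0.95},
    {"path": "/home", "pageviews": 20_000, "bounce_rate": 0.30},
    {"path": "/pricing", "pageviews": 12_000, "bounce_rate": 0.70},
]
for page in rank_pages_for_testing(pages):
    print(page["path"], page["pageviews"], page["bounce_rate"])
```

In this example the pricing page ranks first: it has less traffic than the home page, but far more room for improvement. The about page has the worst bounce rate yet ranks last because so few people see it.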

Survey

From your survey tool, you need to know the tasks people are trying to perform, and how successful they are at those tasks. There are a few questions you need to include in your website survey, but the most critical ones are these:

- What are you looking for on the website?

- Did you find the information you were looking for?

Just as you look for high-traffic, low-interaction pages in a web analytics tool, in the surveys you need to find the intersection of two things:

- tasks that people care about most

- tasks where they fail a lot

Once you find that intersection, you can find a critical page in that section to test.
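Finding that intersection can be as simple as tallying survey answers. The sketch below assumes each response has already been categorized into a task and paired with the answer to “Did you find the information you were looking for?”; the task names and counts are made up.

```python
from collections import defaultdict


def rank_tasks(responses):
    """Rank tasks by demand (share of responses) multiplied by failure rate.

    responses: list of (task, found) pairs, where found is True or False.
    Returns rows of (task, demand, failure_rate, score), highest score first.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for task, found in responses:
        totals[task] += 1
        if not found:
            failures[task] += 1

    rows = []
    for task, count in totals.items():
        demand = count / len(responses)
        failure_rate = failures[task] / count
        rows.append((task, demand, failure_rate, demand * failure_rate))
    return sorted(rows, key=lambda row: row[3], reverse=True)


# Hypothetical, already-categorized survey responses
responses = (
    [("compare pricing", False)] * 30 + [("compare pricing", True)] * 20
    + [("contact support", False)] * 5 + [("contact support", True)] * 25
    + [("find careers page", False)] * 4 + [("find careers page", True)] * 1
)
for task, demand, failure_rate, score in rank_tasks(responses):
    print(f"{task}: demand {demand:.0%}, failure {failure_rate:.0%}")
```

Here “compare pricing” comes out on top because many visitors want it and most of them fail, so a page in that section would be the first candidate to test.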

What elements should you test?

Once you have the “champion” page identified based on data from the tools or business priorities, you need to formalize a theory about how to improve the page. Here are a few things you can try:

- Headline. Try to make it clearer on the challenger page. Or have it match the source of the traffic if the source is under your control (email, AdWords, etc.).

- Images. Try authentic images (as opposed to stock photos). And if an image shows a face, have it look towards the call-to-action to direct the users’ attention.

- Call-to-action (CTA). Try a different location. Or try to add contrast with the color palette used by the rest of the website, so that it stands out.

- Form fields. Try reducing the number of fields on the challenger page.

What outcomes should I look for?

You can establish a few different kinds of goals for the split tests, depending on what type of page it is.

Bounce rate

Reducing the bounce rate can be a viable goal if you are testing an entry point or a page largely designed for navigation.

Clickthroughs to CTAs

Use increased CTA clickthroughs to conversion points as the goal if you have a dedicated landing page or a product detail page. More clickthroughs there ultimately lead to better sales.

However, this comes with a small caveat: if you have multiple CTAs and they lead to product purchases with different profit margins, you should take that into account. It’s possible to increase CTA clickthroughs while reducing average order value.
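A quick way to check for this is to compare revenue per visitor rather than conversions alone. The numbers below are hypothetical and only illustrate the arithmetic.

```python
def revenue_per_visitor(visitors, orders, average_order_value):
    """Revenue per visitor = conversion rate x average order value."""
    return orders / visitors * average_order_value


# Hypothetical results: the challenger converts more visitors but at a lower order value
champion = revenue_per_visitor(visitors=10_000, orders=300, average_order_value=80.0)
challenger = revenue_per_visitor(visitors=10_000, orders=330, average_order_value=70.0)

print(f"Champion:   {champion:.2f} per visitor")
print(f"Challenger: {challenger:.2f} per visitor")
print("Winner on revenue:", "challenger" if challenger > champion else "champion")
```

In this example the challenger wins on conversions (330 orders against 300) but still loses on revenue, because the average order value dropped by more than the conversion rate rose.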

How long should the tests run?

Generally, split tests should run as long as it takes to get a result with 90 or 95% confidence that the winner hasn’t been picked based on chance.

Getting to that level requires at least one of two things:

- A very high data rate, so that you can deliver results quickly

- Leaving the test on for a long time, so that you can get a reliable result even if the page being tested isn’t visited very much
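The trade-off between those two options is simple arithmetic once you know how many visitors each variation needs. The sketch below assumes that number comes from a sample size calculator; the function name and the traffic figures are hypothetical.

```python
import math


def estimated_test_days(required_per_variation, daily_visitors, variations=2):
    """Days needed for every variation to reach its required sample size.

    Assumes traffic is split evenly and stays roughly constant during the test.
    """
    total_needed = required_per_variation * variations
    return math.ceil(total_needed / daily_visitors)


# Hypothetical: 8,000 visitors needed per variation, champion vs. challenger
print(estimated_test_days(8_000, daily_visitors=5_000))  # high-traffic page
print(estimated_test_days(8_000, daily_visitors=500))    # low-traffic page
```

With these figures the high-traffic page finishes in 4 days while the low-traffic page needs 32, which leaves far more time for seasonality and shifts in visitor mix to affect the result.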

The longer you run your test, the higher the seasonal traffic risk. You’ll also be exposed to changes in the set of visitor types your site gets and other factors. This is one of the reasons it’s usually a good idea to test pages that tend to get a lot of traffic regardless of bounces or clickthroughs to CTAs.

One thing to remember – as soon as your tool produces results with the right level of statistical significance, end your test. You run additional risks by leaving tests that should have been concluded running, like search engine penalties.

Do split tests carry any risks for SEO?

Split testing carries some minor search engine optimization risks, but only if you get the technical aspects of split testing wrong.

- If you leave your tests running indefinitely even after your tool has produced a result, you risk running into search engine penalties. Search engine spiders generally like to “see” the same things that users see when they crawl a site, and split tests that run forever go against that.

- When you have a champion page URL and a challenger page URL, it’s generally good practice to point to the champion page as the “canonical” URL. If you’re not familiar with tools like “rel=canonical” in-house, make sure you work with a firm that can use it to make sure your split tests follow best practices.

What are the limitations of split tests?

While split testing is great, you’ll quickly hit the ceiling of its usefulness if you expect more from it than what it is suited to deliver. Split tests are not great at solving some issues:

Hitting the global maximum

Split tests will incrementally get you to the local maximum of what your current website design allows for. For anything more than that, you might need a website redesign – split tests will not get you there.

Fixing fundamental site issues

AB tests tend to be good for individual page improvements. If you have large scale problems like mega-menu problems and broken paths, split tests will not help you with the big picture very much.

Lack of conversion optimization plan

Split tests run once in a blue moon, whenever management has time for them, are a hobby, not a program. You’ll get some gains, but you will not get the momentum you’d typically see from a proper conversion rate optimization plan. Split tests will not get very far as an isolated tool – you need a CRO program that includes split tests.

Avoid Split Testing Pitfalls

Split tests are a great way to improve critical pathways on your site – whether they are navigation pages where people tend to bounce or custom landing pages where the lack of clicks to your CTA hurts the business.

When you have your original page as the champion and an idea you’re testing as a challenger, you can settle a lot of debates while using data. This saves the company’s time and energy rather than having protracted interdepartmental arguments about what works on the website.

That said, you do need to avoid some pitfalls:

- Don’t use split tests when what you need is a usability test.

- Don’t use AB tests when what you need is user acceptance testing.

- Don’t assume split tests are right for you automatically. Look at your data first.

- Don’t try to use split tests if you can’t add things like Google Analytics scripts to the site.

- Don’t use AB tests without having a clear goal for the page you’re testing.

- Don’t leave split tests running longer than they need to run. There is a penalty risk.

- Don’t confuse running split tests with having a conversion rate optimization strategy.

If you start having regular split tests as a part of a larger conversion program, you can get pretty significant gains for your site.