Summary: Google Search Console (GSC) is essential to a digital marketer’s toolset. It pays to understand the basics, from enabling GSC on your website and learning what users search to checking for indexing issues.

In this article, we’ll talk about the basics of Google Search Console that digital marketers need to know:

Introduction: What Is Google Search Console

1. Adding a Google Search Console Property

2. Learning What People Are Searching For

3. Letting Google Know What to Crawl First

4. Checking If a Page Can Be Indexed

5. Checking Blocked Pages

Conclusion: Understanding Google Search Console Basics

Introduction: What Is Google Search Console?

Google Search Console, or GSC (formerly Google Webmaster Tools), is a versatile, free marketing tool. While SEOs tend to be familiar with it, most marketers, conversion specialists, and website designers stand to gain something from understanding the basics.

At its core, Google Search Console is a tool that …

- … allows marketers to see what terms people use to get to the website, and

- … provides an avenue to tell Google about how to crawl the site best.

Before you can use these functions, a bit of setup is necessary.

1. Adding a Google Search Console Property

You first need to add your website as a property in your Google Search Console account.

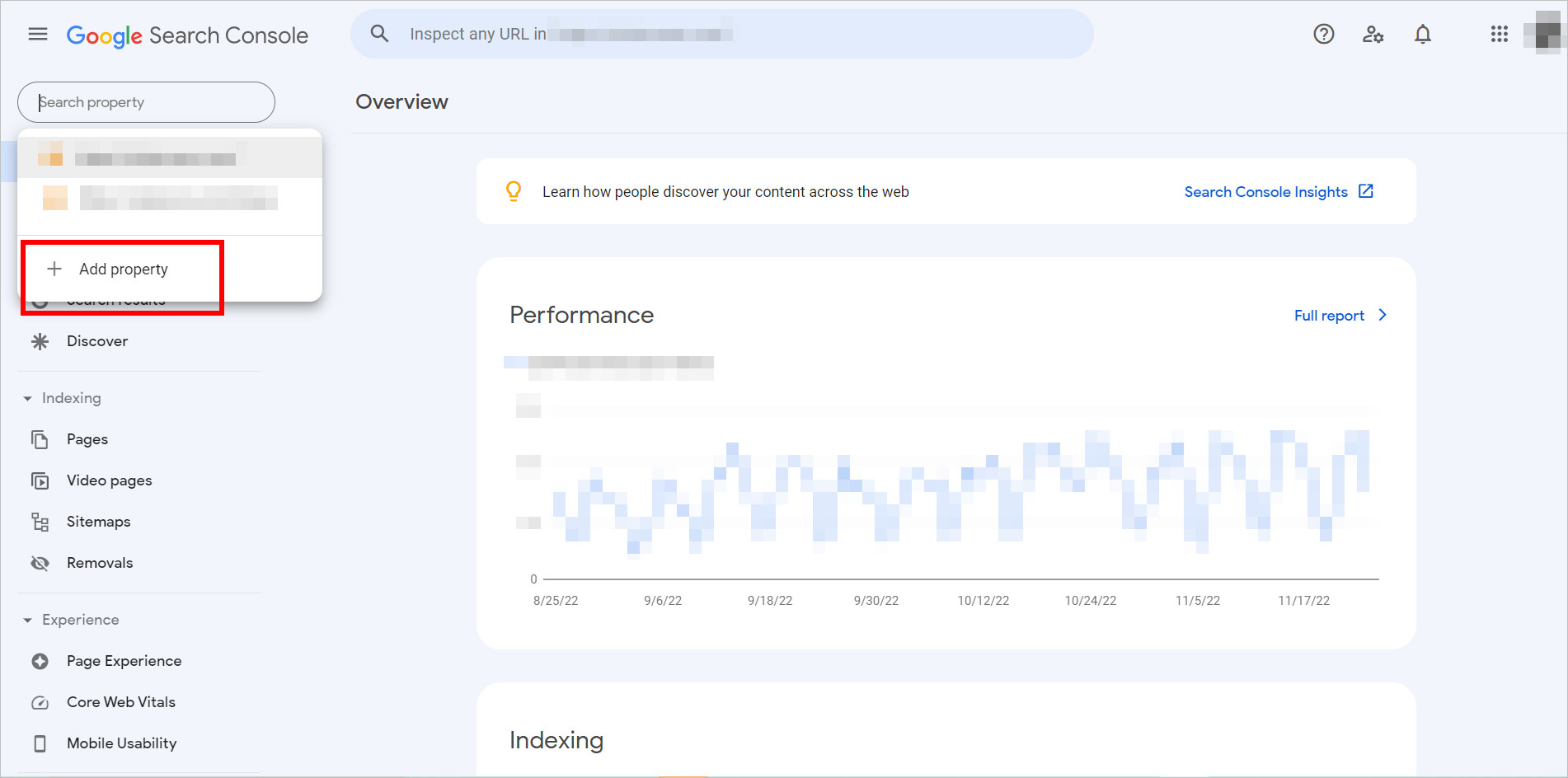

Open the property selector dropdown and click on “+ Add property”:

The property selector dropdown can be found on the upper-left corner of any Search Console page.

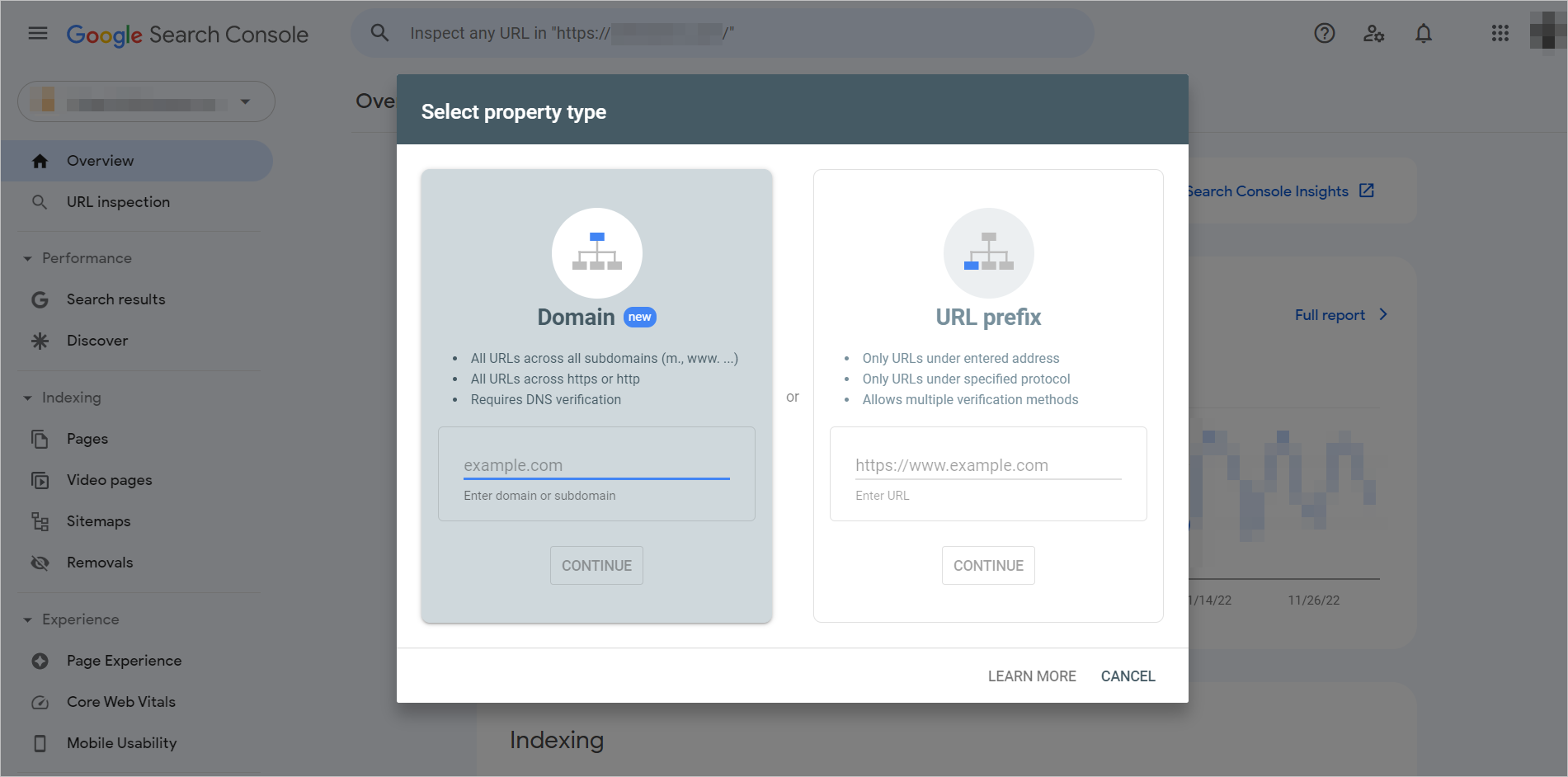

A popup modal with two options will appear for you to verify website ownership. You can choose whether the property you’re trying to add is a domain or a URL prefix:

The “Select property type” modal shows “Domain” and “URL prefix” as options.

On the popup, choosing the Domain property type is described as follows:

- All URLs across all subdomains (m., www. …)

- All URLs across https or http

- Requires DNS verification

On the other hand, the URL-prefix property type indicates the following:

- Only URLs under the entered address

- Only URLs under specified protocol

- Allows multiple verification methods

If you’re comfortable with and have access to the domain configuration for your site (e.g., GoDaddy.com, Name.com, etc.), you can verify using the domain method.

For example, you’ll need to log in to GoDaddy.com and provide Google Search Console access to verify ownership.

Otherwise, you can take the URL prefix route.

Verifying Google Search Console Through URL Prefix

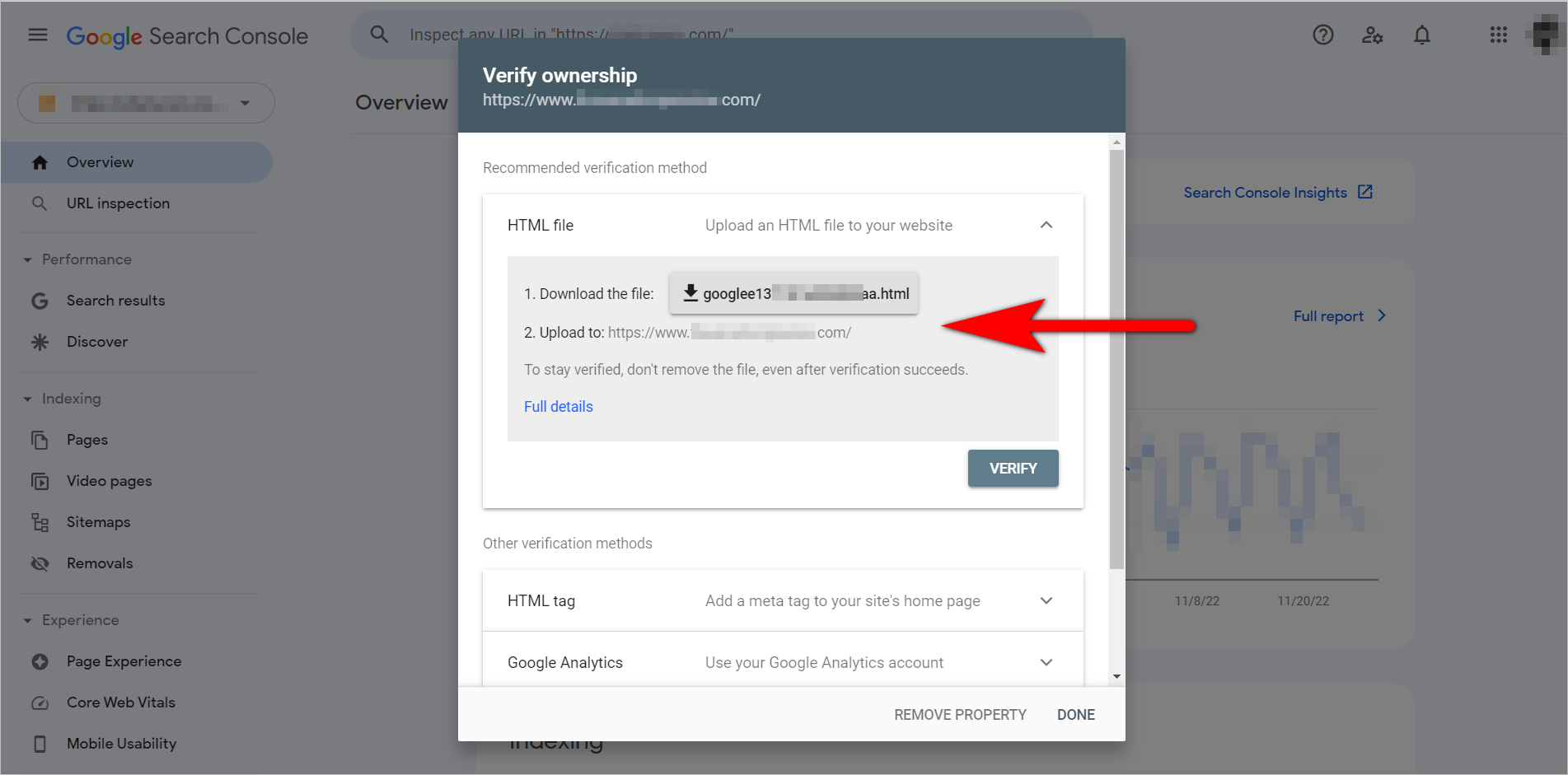

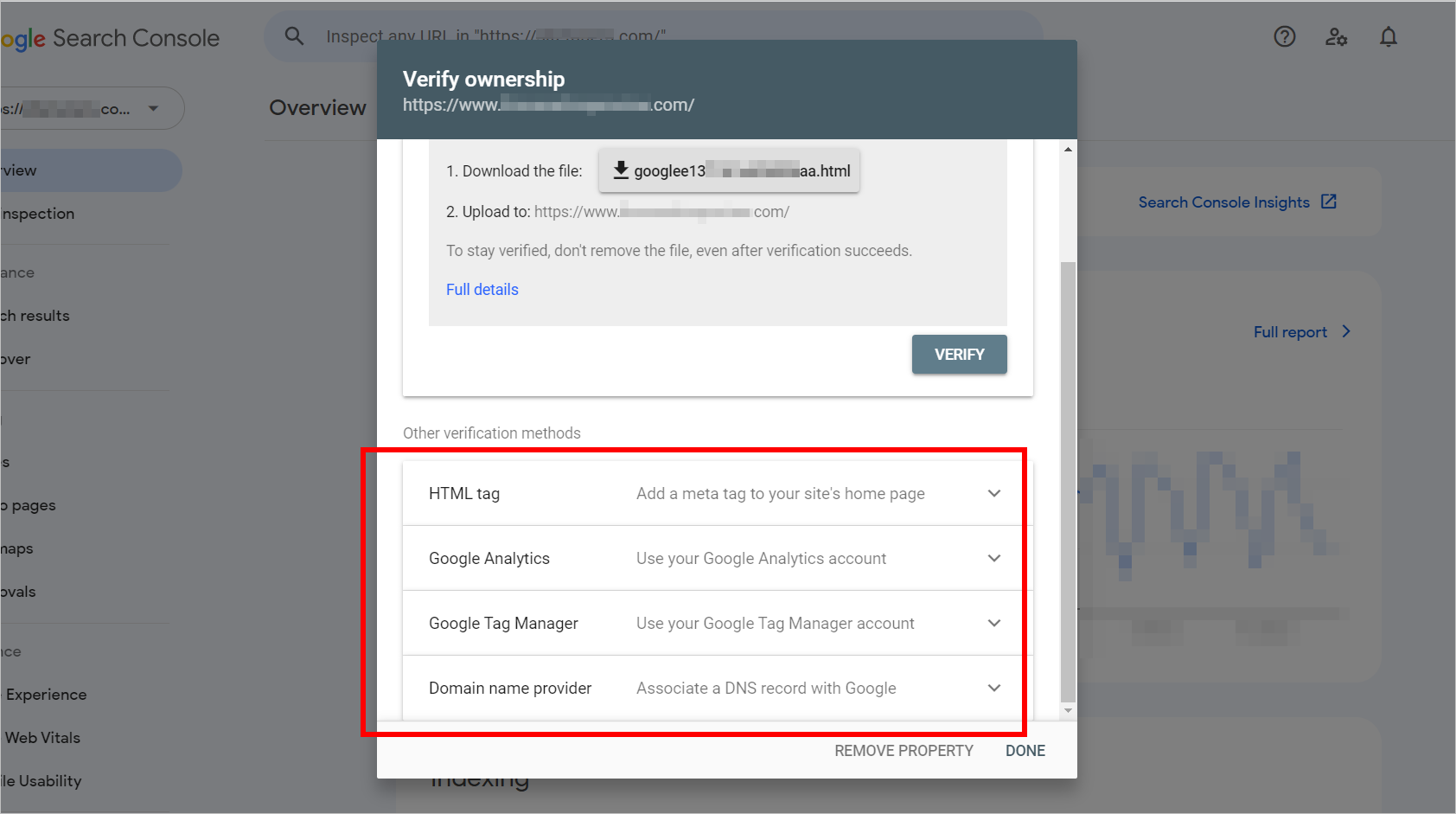

After you input your entire URL prefix in the “Select property type” popup modal, the “Verify ownership” modal will be displayed.

The modal shows 5 ways to activate Google Search Console through the URL prefix method.

One way is by adding a file to the root of your site. You’ll need to download the auto-generated file from GSC and upload it to your website:

The first section of the “Verify ownership” modal indicates that uploading an HTML file to the website is the recommended verification method. The section has a 2-step instruction telling the user to download the file and upload it to the URL they inputted in the previous popup.

Or you can verify by using one of the 4 items below:

The second part of the modal shows other verification methods. It lists the following methods with their corresponding instructions: HTML tag (“Add a meta tag to your site’s home page”), Google Analytics (“Use your Google Analytics account”), Google Tag Manager (“Use your Google Tag Manager account”), and Domain name provider (“Associate a DNS record with Google”).

1. HTML Tag

You can add a meta tag to the head section of your site’s home page and let Google check that it exists. That’s enough to verify ownership.

2. Google Analytics

If you also manage the Google Analytics (GA) account, you can use GA to confirm that you own the GSC presence for the site.

3. Google Tag Manager

If you also manage Google Tag Manager (GTM) and use the container snippet, you can use that to verify ownership of GSC.

4. Domain Name Provider

You can sign in to your domain name provider and insert a text record into the DNS configuration.
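For the HTML tag method above, it can help to confirm the meta tag is actually present in your home page’s HTML before you click Verify. The snippet below is a minimal sketch of that check, not Google’s own procedure. It assumes the tag uses the name `google-site-verification`; the function name, the sample HTML, and the `EXAMPLE_TOKEN` value are hypothetical placeholders.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects the content of every google-site-verification meta tag."""

    def __init__(self):
        super().__init__()
        self.tokens = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        name = (attr_map.get("name") or "").lower()
        content = attr_map.get("content")
        if name == "google-site-verification" and content:
            self.tokens.append(content)


def find_verification_tokens(html):
    """Return the verification tokens found in the given HTML string."""
    finder = VerificationTagFinder()
    finder.feed(html)
    return finder.tokens


# Hypothetical home page markup; the token is a placeholder, not a real value.
sample_html = (
    "<html><head>"
    '<meta name="google-site-verification" content="EXAMPLE_TOKEN" />'
    "</head><body></body></html>"
)
print(find_verification_tokens(sample_html))
```

An empty list would suggest the tag is missing or was placed somewhere your template strips out.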

As soon as GSC verifies that you own the site, it collects and reports data about search terms and crawl rates.

Once you verify that it’s your site, you can also add paths under it as separate properties without additional verification. So, if you have registered and confirmed that you own www.example.com, you can add a property for www.example.com/blog without using one of the five methods mentioned above. The www.example.com/blog path will already be pre-verified.

2. Google Search Console Basics: Learning What People Are Searching For

Arguably the most crucial feature of GSC is the ability to check what search terms people use to get to your website. Combined with on-site search and survey data, this can tell you about user intent.

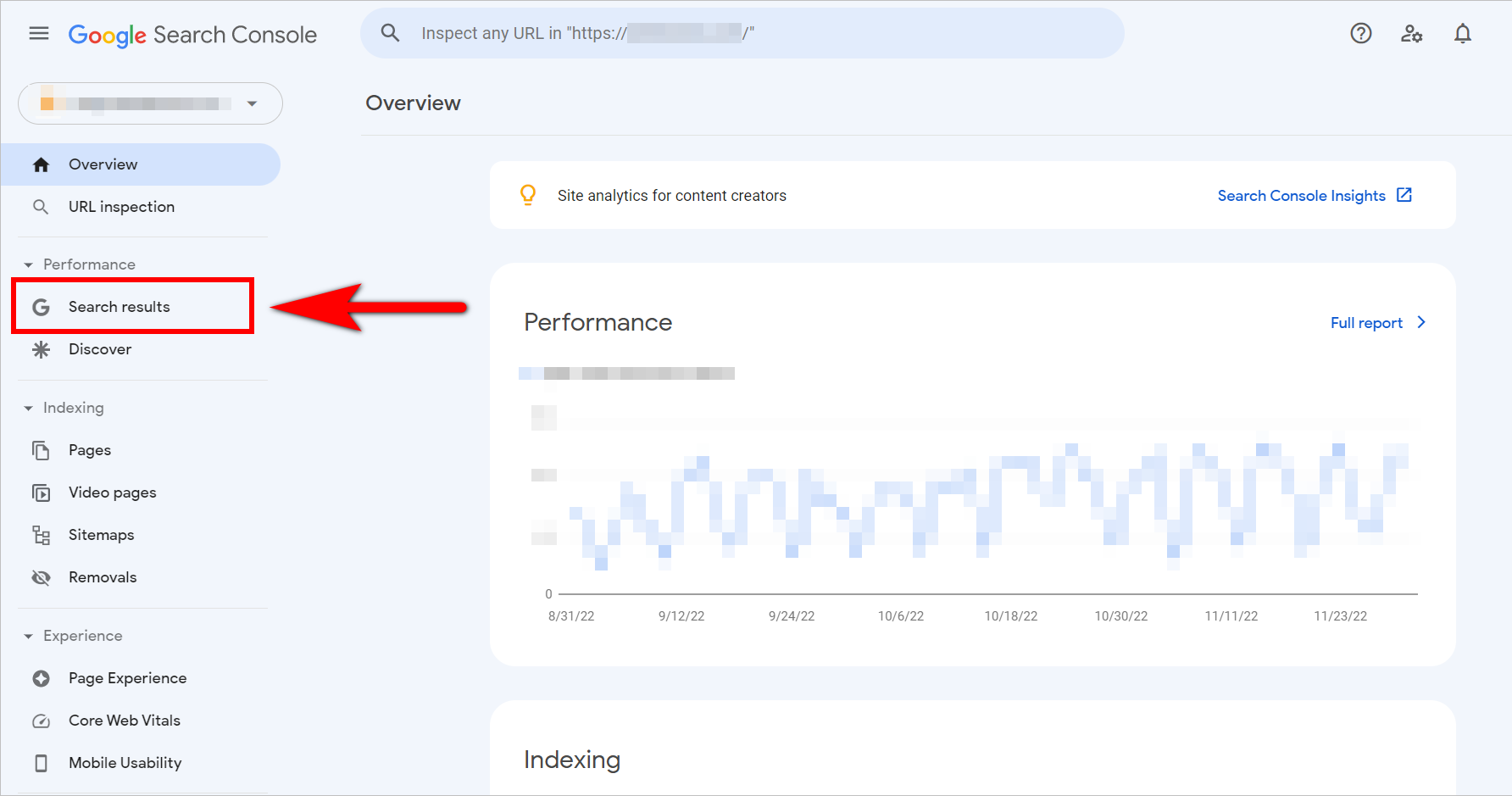

Within Google Search Console, you can go to Performance > Search results to see the clicks, impressions, click-through rate, and position of the search terms.

Check what search terms people use to get to your site by clicking on “Search results” under “Performance” in the left panel of Google Search Console.

If you’re Samsung, for instance, you might get search analytics data like this:

| Queries | Clicks | Impressions | CTR | Position |
|---|---|---|---|---|
| samsung | 2,524,415 | 6,010,512 | 42% | 1 |
| samsung tv | 311,666 | 842,341 | 37% | 1 |
| samsung gaming monitor | 21,644 | 76,479 | 28.3% | 1 |
| 8k tv | 6,731 | 54,281 | 12.4% | 3.4 |
| front load washer | 891 | 68,523 | 1.3% | 8.2 |

Here’s what those terms mean:

- Queries – the actual search terms people use to search on Google

- Impressions – the number of times a page on your site appears for the given terms

- Clicks – the number of times people click on a result on your website for the given terms

- CTR – the click-through rate, or the percentage of clicks relative to the impressions

- Position – the average Google position of your pages for the given terms across all countries
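To make the CTR definition concrete, here is a small sketch that recomputes the click-through rate from clicks and impressions, using the figures from the sample table above. The function name is an illustrative choice, not part of any Google tool.

```python
def click_through_rate(clicks, impressions):
    """Return CTR as a percentage: clicks divided by impressions, times 100."""
    if impressions == 0:
        return 0.0  # avoid dividing by zero for terms with no impressions
    return 100.0 * clicks / impressions


# (query, clicks, impressions) taken from the sample table above
rows = [
    ("samsung", 2_524_415, 6_010_512),
    ("samsung tv", 311_666, 842_341),
    ("samsung gaming monitor", 21_644, 76_479),
    ("8k tv", 6_731, 54_281),
    ("front load washer", 891, 68_523),
]

for query, clicks, impressions in rows:
    print(f"{query}: {click_through_rate(clicks, impressions):.1f}%")
```

Rounded to one decimal place, the results match the CTR column in the table.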

Why is it important to identify what people are searching for?

That data is helpful for a slew of different functions:

- You can check if your educational pages are attracting early-stage searches and see how effective your top-of-the-funnel efforts are.

- It allows you to see what terms people are typing in when they either know your brand or are looking for particular things and are likely to be at the bottom of the funnel.

- You can see which terms in your space you don’t have high enough Google positions for and can target those terms with new pages you create.
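The last point, finding terms where your position is not yet high enough, can be sketched as a simple filter over exported performance data. This is only one possible approach: the thresholds (`min_impressions`, `worse_than_position`) and the row layout are assumptions chosen for illustration, and the rows reuse the sample table above.

```python
def find_opportunities(rows, min_impressions=10_000, worse_than_position=3.0):
    """Return queries that get many impressions but rank below the given position.

    Each row is a dict with "query", "impressions", and "position" keys.
    Results are sorted with the highest-impression queries first.
    """
    candidates = [
        row for row in rows
        if row["impressions"] >= min_impressions
        and row["position"] > worse_than_position
    ]
    candidates.sort(key=lambda row: row["impressions"], reverse=True)
    return [row["query"] for row in candidates]


sample_rows = [
    {"query": "samsung", "impressions": 6_010_512, "position": 1.0},
    {"query": "samsung tv", "impressions": 842_341, "position": 1.0},
    {"query": "samsung gaming monitor", "impressions": 76_479, "position": 1.0},
    {"query": "8k tv", "impressions": 54_281, "position": 3.4},
    {"query": "front load washer", "impressions": 68_523, "position": 8.2},
]

print(find_opportunities(sample_rows))
```

With these thresholds, the sketch surfaces “front load washer” and “8k tv”, the two terms in the sample where new or improved pages might help most.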

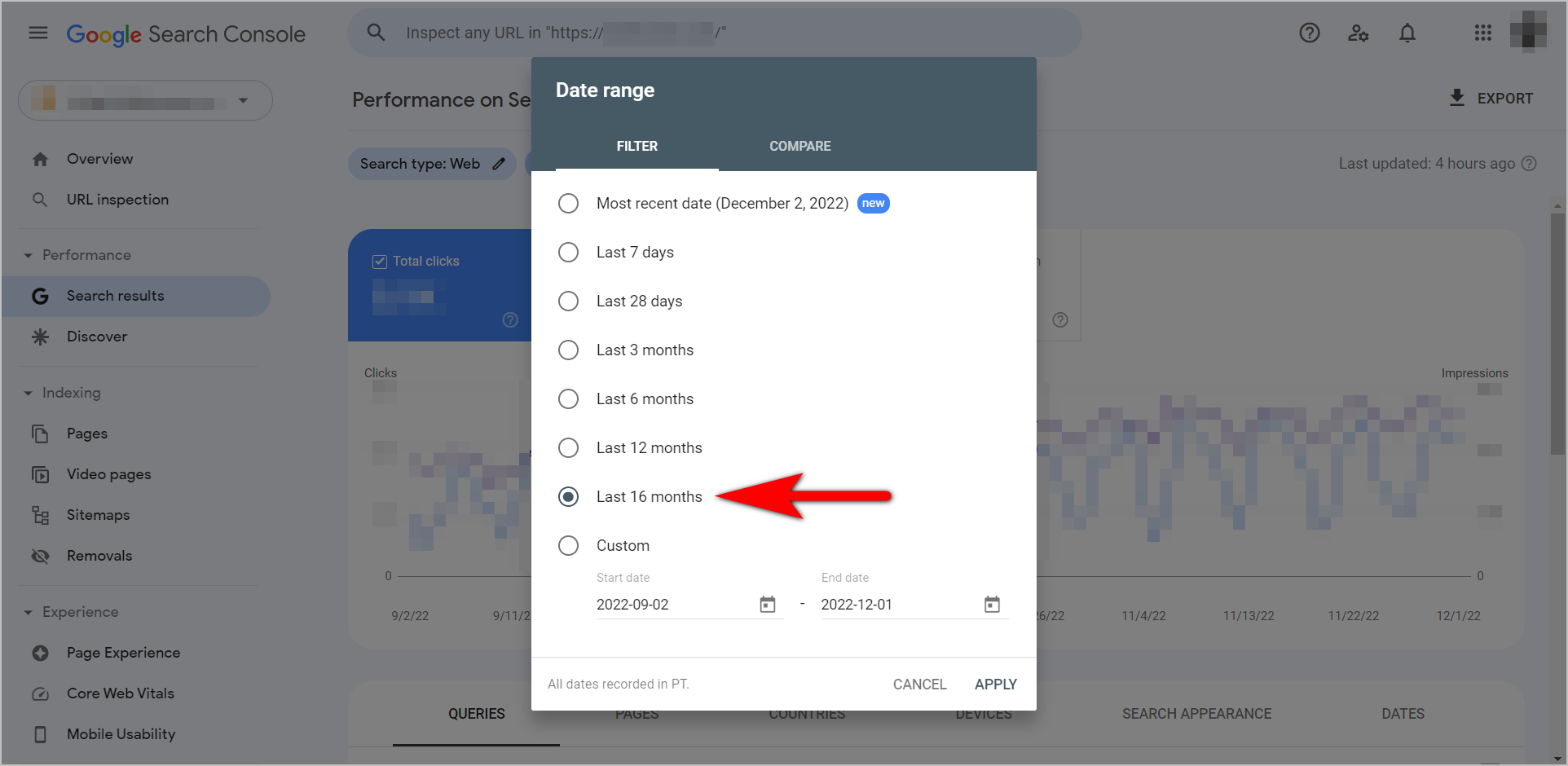

How far back can marketers see data on Google Search Console?

The old version of this tool – Google Webmaster Tools – allowed users to see only 3 months’ worth of data. Google Search Console, by contrast, allows users to check up to 16 months’ worth of keyword data.

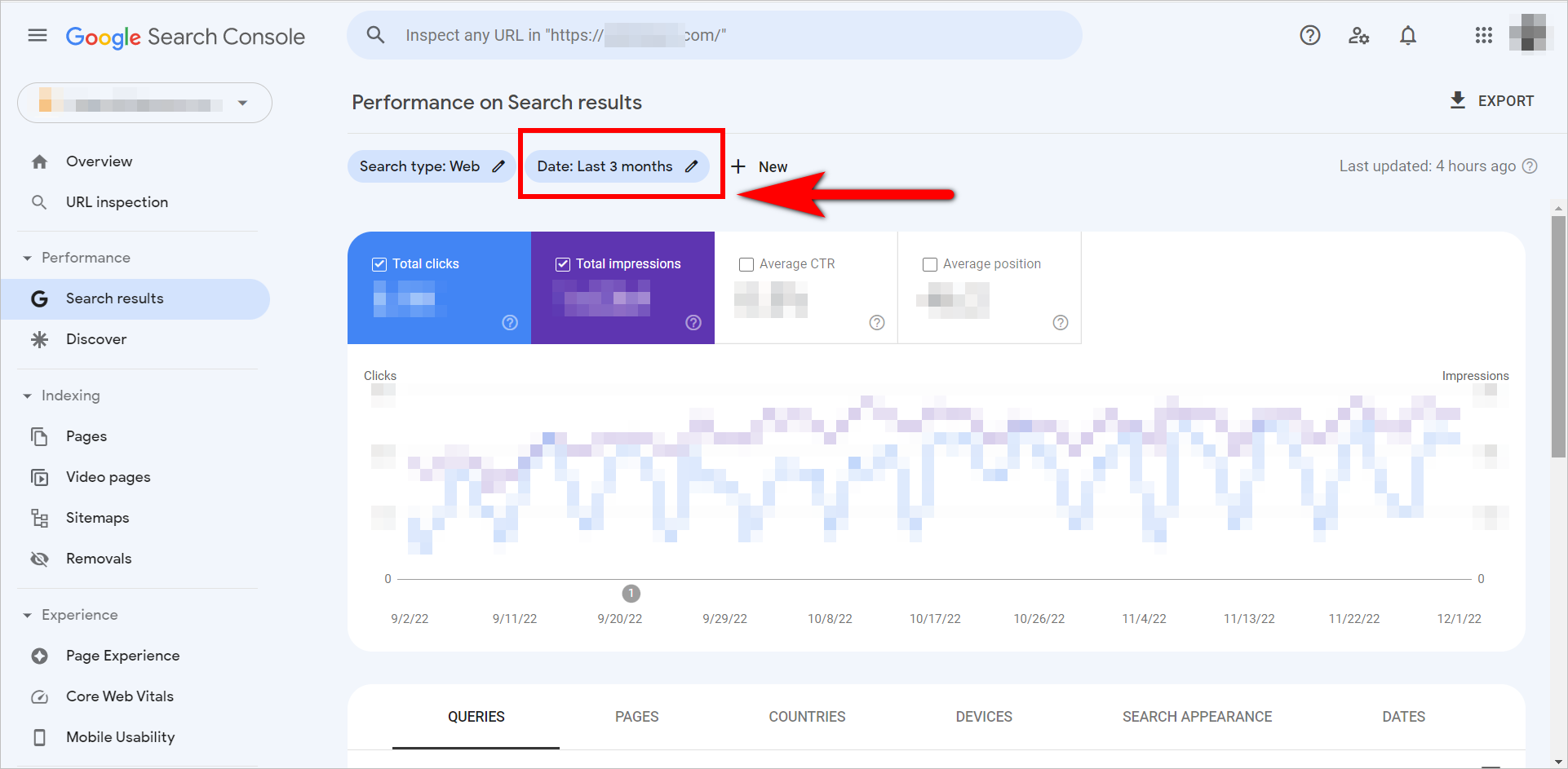

You need to click on the date coverage near the upper-left side of the page and change the date range as needed:

The Performance on Search results page has filters at the top: search type is set to Web, and date is set to the last 3 months by default. Users can also click on “+ New” to add filters.

The date range modal pops up when the date filter is clicked.

3. Letting Google Know What to Crawl First

Google’s spiders will crawl your site whether or not you have a sitemap. However, you can tell Google which pages you prioritize when you submit a sitemap via Google Search Console. Google has a limited amount of crawl time dedicated to each site. And to make the most of that crawl time, you’d want to tell Google which pages you think the spiders should crawl first.

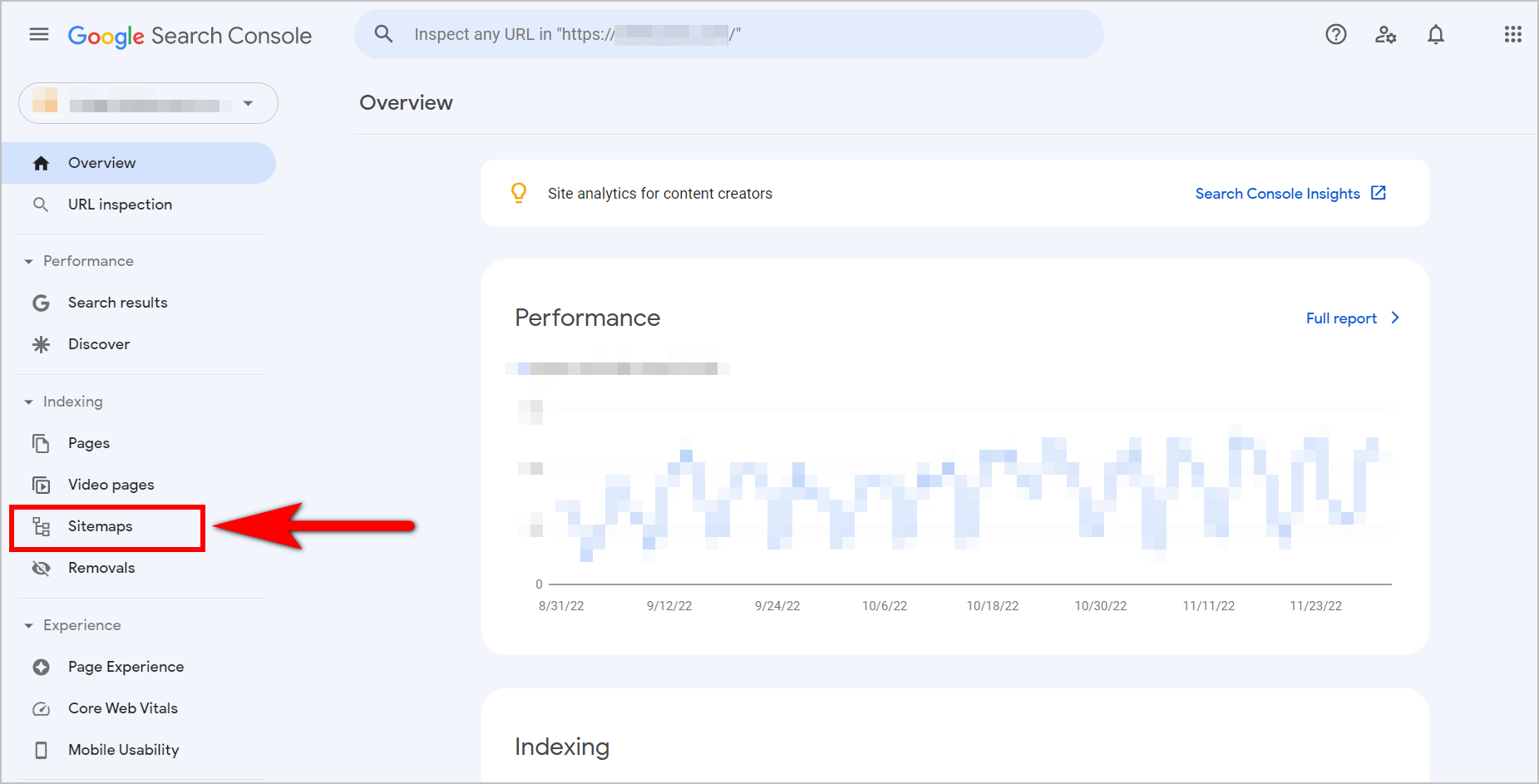

To start that process, go to Indexing > Sitemaps:

“Sitemaps” is under “Indexing” in the left panel of Google Search Console.

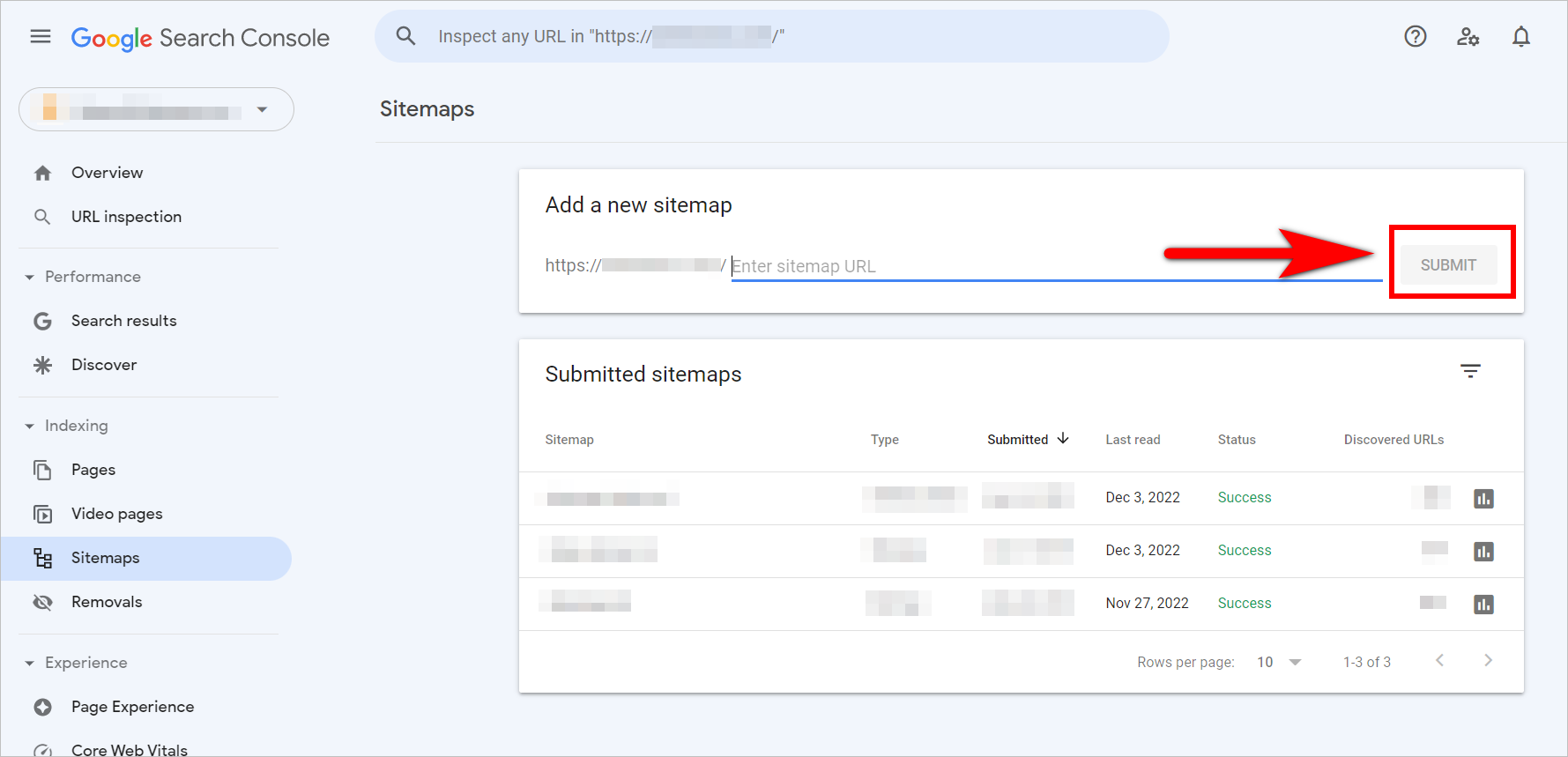

Once you’re there, you can add a sitemap by plugging in the location of the sitemap and then clicking on SUBMIT:

Add a sitemap by entering your sitemap URL in the bar and clicking on the “Submit” button beside it.

If you can build an XML sitemap that updates periodically, great, you can submit that. However, even if you don’t have that, you can still upload a basic text sitemap somewhere on the root of your site and submit that location to GSC.

If you’re using a text sitemap, remember to save it in UTF-8 encoding with the format below:

https://www.example.com/url1

https://www.example.com/url2

https://www.example.com/url3
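If you would rather generate the sitemap than type it by hand, the sketch below builds both a text sitemap and a minimal XML sitemap from a list of URLs. It assumes fully qualified URLs that include the https:// scheme, and it uses the namespace from the sitemaps.org protocol. The function names and the output file name are hypothetical.

```python
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_text_sitemap(urls):
    """Return a text sitemap: one URL per line."""
    return "\n".join(urls) + "\n"


def build_xml_sitemap(urls):
    """Return a minimal XML sitemap with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def write_utf8(path, content):
    """Save the sitemap with UTF-8 encoding."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(content)


urls = [
    "https://www.example.com/url1",
    "https://www.example.com/url2",
    "https://www.example.com/url3",
]

print(build_text_sitemap(urls))
# write_utf8("sitemap.txt", build_text_sitemap(urls))  # hypothetical file name
```

Either output can be uploaded to the root of your site, and its location submitted in the Sitemaps report.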

Once you’ve submitted the sitemap to Google, you can wait a while and then check how many of those pages Google has crawled. That should give you a pretty good idea about whether or not you are getting your most important pages seen by Google.

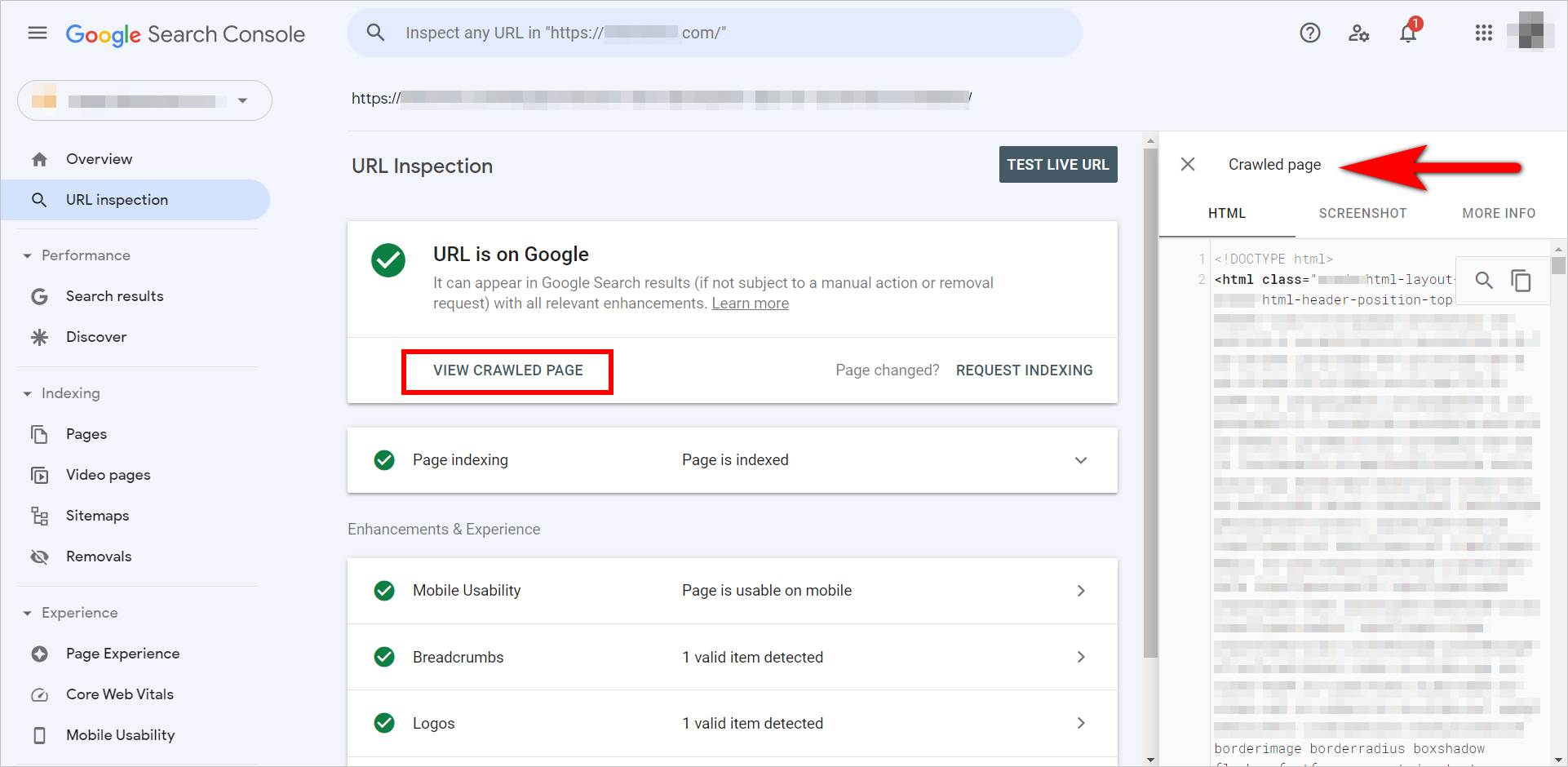

4. Google Search Console Basics: Checking If a Page Can Be Indexed

Sometimes, you’ll want to check whether Google has trouble crawling a particular page, reading its HTML, or rendering it correctly. For those kinds of problems, GSC has a feature called URL inspection.

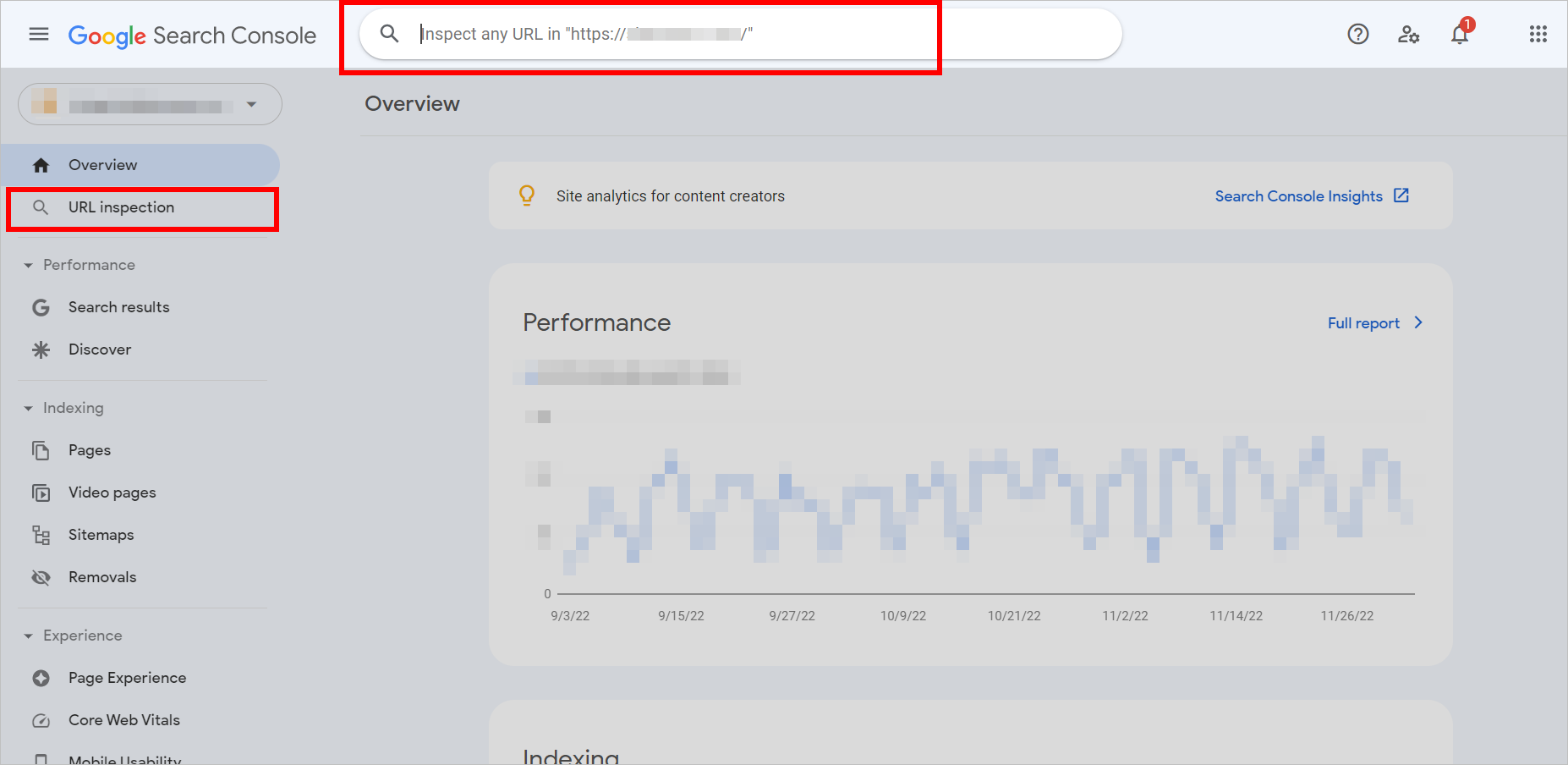

There’s a “URL inspection” link on the left panel of GSC, but you can also type the URL you want to inspect directly into the search bar at the top.

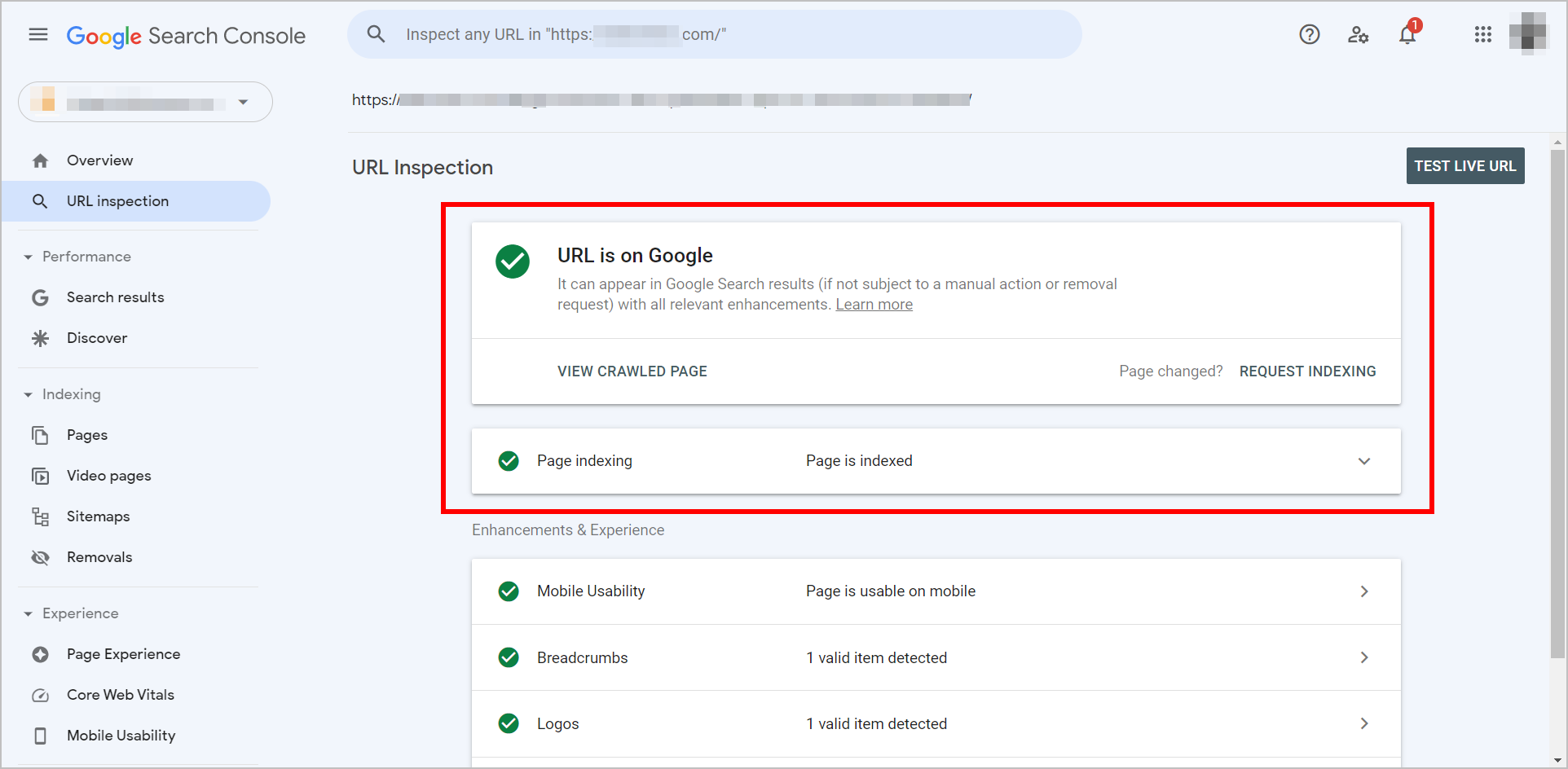

Once you plug in a URL to inspect, Google will tell you whether its spiders can crawl and index your page:

The URL Inspection page will indicate that the “URL is on Google” and that the “Page is indexed” if Google spiders can crawl and index the page.

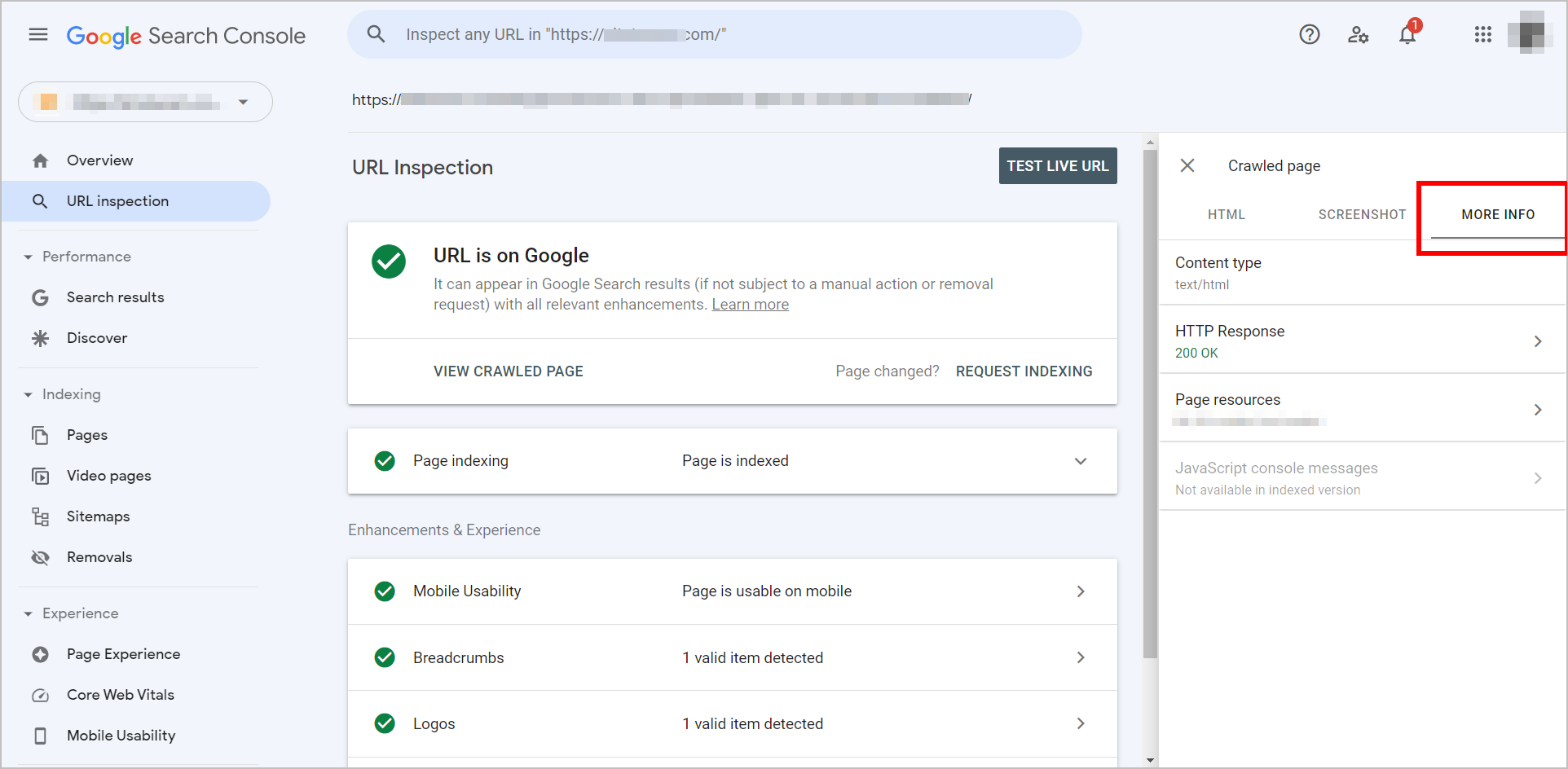

You can click on VIEW CRAWLED PAGE to check what Google sees in the HTML, what the page looks like to Google, and more information on the page like the HTTP response:

Clicking on “View Crawled Page” launches the “Crawled page” panel on the right. It has 3 tabs: HTML, Screenshot, and More Info.

The “More Info” tab of the “Crawled page” panel includes information like the HTTP Response.

This information can help you identify pages that need fixing.

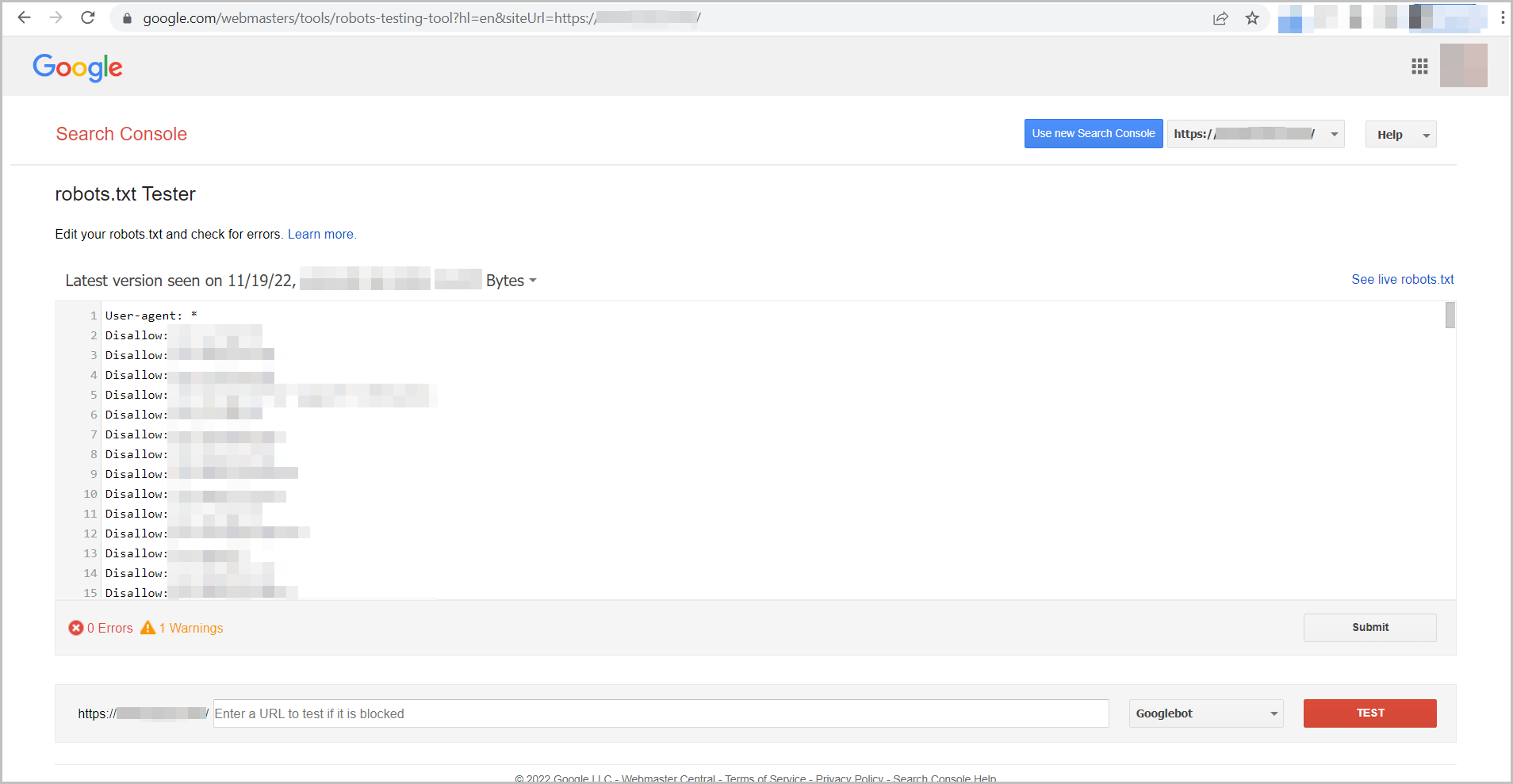

5. Checking Blocked Pages

There might be pages on your site that you don’t want Google to waste its crawl time on. Since Google’s crawl time is limited, you’d like it to spend time crawling your most valuable pages or at least your valid pages.

There are a few page types you probably don’t want crawled:

- Your 404 page

- Search strings with parameters

- Anything protected by login/account information before a user can access the section

You can exclude those types of pages via a robots.txt file.

You can check whether those exclusions work under a legacy section of Google Search Console.

This section looks older than the rest of the interface and is no longer linked from the new platform. However, it still lets you check if the URLs you want to block are indeed blocked:

Robots.txt Tester indicates that you can “Edit your robots.txt and check for errors.” The tool shows conditions about sections on the website and whether they can be crawled. Google has been instructed not to crawl areas marked with “Disallow,” followed by a string of the website section.
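You can also run a rough local check of your exclusions before relying on the tester. The sketch below uses Python’s standard `urllib.robotparser` module with a hypothetical robots.txt that blocks a search path, an account area, and a 404 page. Note that this parser does simple prefix matching and may not mirror every detail of how Google interprets robots.txt (wildcards, for example), so treat it as a sanity check only. The rules and URLs are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules covering the page types listed above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /account/
Disallow: /404
"""


def is_blocked(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt rules disallow the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


for url in [
    "https://www.example.com/search?q=tv",
    "https://www.example.com/account/settings",
    "https://www.example.com/blog/post",
]:
    status = "blocked" if is_blocked(ROBOTS_TXT, url) else "crawlable"
    print(f"{url}: {status}")
```

The first two URLs should come back as blocked, while the blog post stays crawlable.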

Conclusion: Understanding Google Search Console Basics

Google Search Console is a powerful tool if you know what you’re looking for.

Once you’ve verified your site, ensure you’re using at least the essential functions that make the tool worth the hassle of verifying:

- Review the search terms people use to get to your site and learn intent data for the different parts of the funnel.

- Submit a sitemap to Google, and check how many of your most important pages are indexed.

- Inspect URLs to check how Google views your pages.

GSC is pretty handy when you know exactly where to dig.

This post was originally published in June 2018 and has been updated to reflect the interface of Google Search Console in 2022.